The Future of Generative AI – From Models to Emergent AI

Generative AI (GenAI) is changing the IT landscape. It gained widespread recognition with the success of ChatGPT in 2022. AI has long been a strategic asset for IT, but generative AI now lets everyday users apply it directly and deliver value quickly. The commercial potential is immense, and many forward-thinking organizations are already experimenting with a generative AI strategy.

To capitalize on these opportunities, IT executives are discussing how this technology can transform their business, improve their ability to deliver value, and create a competitive advantage. At the same time, enterprises are concerned about hallucination (inaccurate or harmful output), mismanagement of data assets, and privacy policy issues, and they want robust strategies that address these risks while still delivering outcomes quickly. IT executives therefore need a few guiding tenets to craft a persuasive generative AI strategy. With these factors in mind, we have identified three key questions that can shape an enterprise’s generative AI strategy:

- What are the implications of generative AI on critical areas of IT?

- How do organizations decide on the right implementation approach – fine-tuning off-the-shelf models vs. training custom models?

- How will generative AI evolve into data-centric AI?

What are the implications of generative AI on the critical areas of IT?

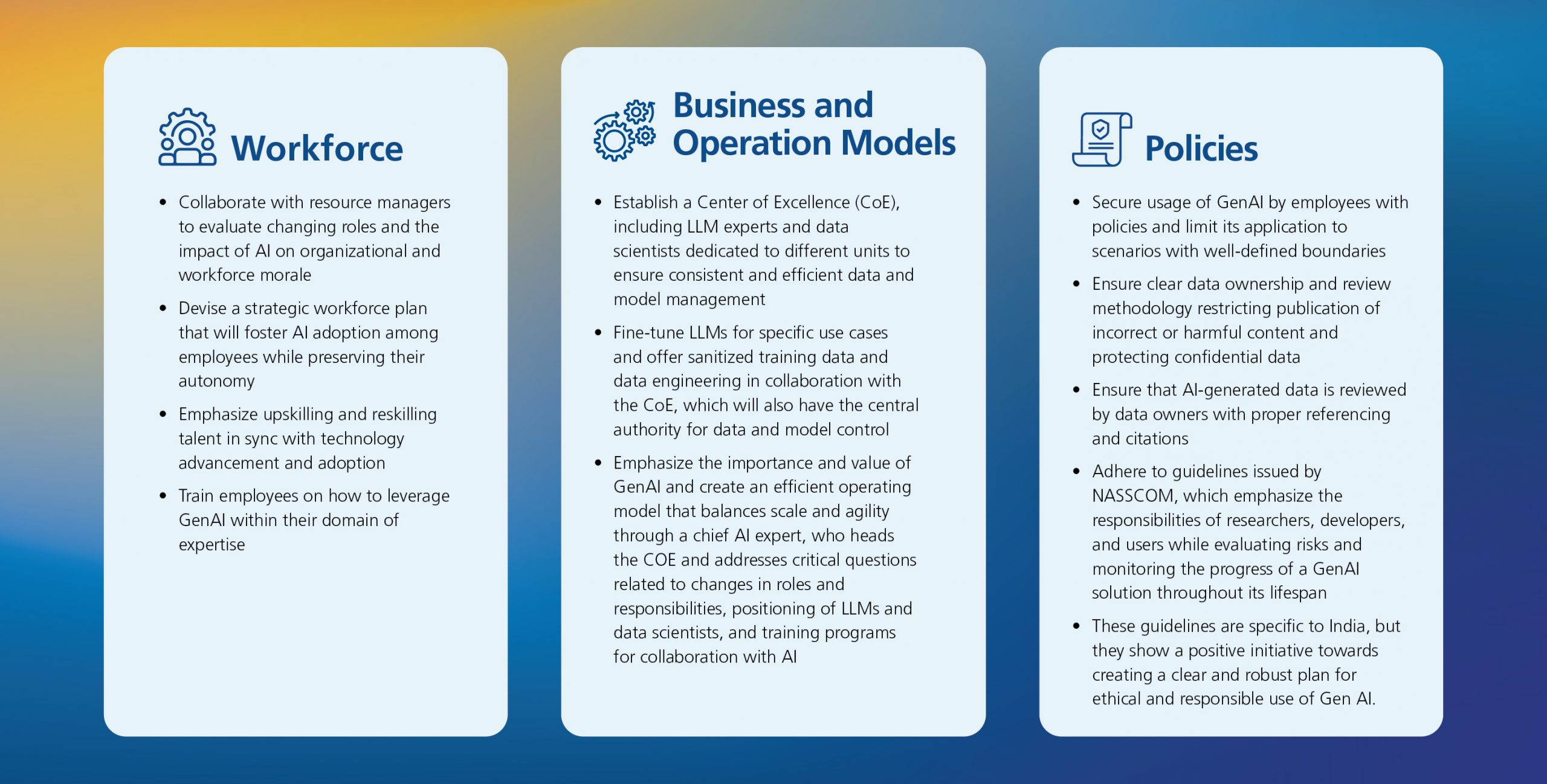

Because generative AI is so versatile, IT organizations want to use it to gain efficiency and overcome technical constraints. Before embarking on that journey, they must understand the implications of generative AI for the workforce, business models, and data governance policies, as well as the kind of innovation needed to address them. Let’s discuss the implications across the following critical areas and how they can shape your generative AI strategy:

- Workforce

Generative AI will redefine job roles and the skill sets people need to work alongside AI. Software engineers can use generative low-code platforms to streamline coding, debugging, and testing, but they may become over-reliant on AI and neglect code quality. NYU’s Center for Cybersecurity reports that AI-generated code is often unreliable and insecure, which poses a serious security risk because most employees cannot identify code vulnerabilities. As prompt engineering and recommendation engines improve, generative AI will advance to reviewing code and creating complex algorithms. This will disrupt the roles of software developers and demand new skills and competencies.

- Business and operation model

This technology will enable organizations to use their data assets, workflows, and interfaces in new ways. Enterprises can do this by experimenting with generative AI extensions to their products and value propositions. They will aim to democratize the adoption of generative tools by integrating them with cloud services. New business models may emerge in the next few years, such as inference delivered as an application programming interface (API), end-to-end (E2E) generative services, and vertical-specific solutions that embed generative AI as a feature or enhancement.

- Policies

Generative AI can produce inaccurate or harmful information, known as “hallucination.” It can also pose other serious risks, such as violating intellectual property rights, exposing confidential data, and exhibiting unintended functionality, known as capability overhang.

Proactive steps:

Figure 1: Proactive steps to mitigate GenAI implications

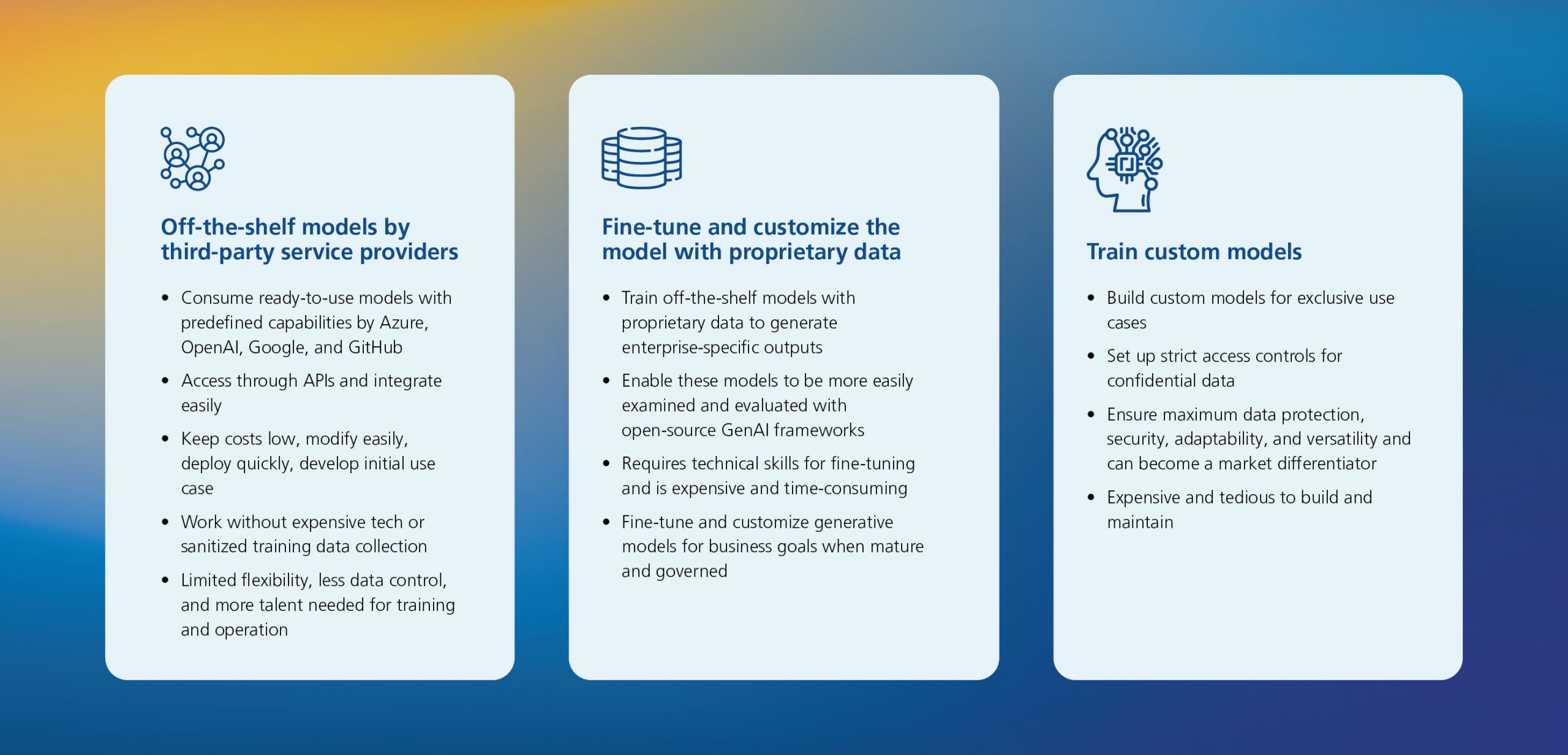

How do organizations decide on the right implementation approach?

Before choosing an implementation approach for their generative AI strategy, organizations should collaborate with third-party providers and experiment with off-the-shelf models. Doing so helps them understand the technology’s capabilities and limitations and identify the use cases and scenarios that best fit their business goals. They can draw on the expertise and experience of third-party providers to develop and deploy generative AI solutions while avoiding potential risks and pitfalls. They can also evaluate the performance and accuracy of generative AI models and weigh their benefits against their challenges. These evaluations let them choose an implementation approach that aligns with their goals, resources, and constraints. We have identified three significant methods to help IT leaders choose strategically between fine-tuning off-the-shelf models and developing and training custom models.

Figure 2: GenAI implementation approach
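The evaluation step described above, comparing off-the-shelf models on performance and accuracy before committing to an approach, can be sketched in a few lines of Python. This is a minimal illustration and not a prescribed method: `model_a` and `model_b` are hypothetical stand-ins for calls to third-party models, the labeled examples are invented for the demo, and exact-match accuracy is only one of many possible metrics.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins for third-party, off-the-shelf models.
# In practice each function would call a provider's API.
def model_a(prompt: str) -> str:
    return "positive" if "great" in prompt.lower() else "negative"

def model_b(prompt: str) -> str:
    return "positive"

def accuracy(model: Callable[[str], str], examples: List[Tuple[str, str]]) -> float:
    """Fraction of examples where the model output exactly matches the expected label."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(
        1 for prompt, expected in examples
        if model(prompt).strip().lower() == expected
    )
    return correct / len(examples)

def rank_models(
    models: Dict[str, Callable[[str], str]],
    examples: List[Tuple[str, str]],
) -> List[Tuple[str, float]]:
    """Score every candidate model and return (name, accuracy) pairs, best first."""
    scores = {name: accuracy(fn, examples) for name, fn in models.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Invented evaluation set: (input text, expected label).
EXAMPLES = [
    ("The onboarding flow was great.", "positive"),
    ("Support never answered my ticket.", "negative"),
    ("Great documentation, easy setup.", "positive"),
    ("The invoice was wrong twice.", "negative"),
]

if __name__ == "__main__":
    for name, score in rank_models({"model_a": model_a, "model_b": model_b}, EXAMPLES):
        print(f"{name}: {score:.2f}")
```

A small, business-specific evaluation set like this gives a first signal on whether an off-the-shelf model is good enough as is, or whether fine-tuning or a custom model is worth the extra cost.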

How will generative AI evolve into data-centric AI?

Currently, the world relies on pre-trained models capable of performing generic tasks. These models can be fine-tuned for specific tasks or domains, such as pattern recognition and sentiment analysis. However, this is not enough for a robust generative AI strategy. The models are still limited by the quality and quantity of the training data supplied, the availability of computational resources, and the expertise needed to use and maintain them. Organizations looking to introduce generative models into domain-specific traditional AI applications will therefore need specialized pre-trained models. Given the complexity of these models, the lack of data privacy norms, and hallucination-related problems, it will take some time for such models to reach the market. The healthcare industry has already taken the first step in this direction by developing generative models for specific use cases such as drug discovery and molecule engineering.

In the meantime, organizations are layering prompt engineering on top of generic pre-trained models to obtain specialized behavior. Prompt engineering lets them use the power of pre-trained models without modifying them or retraining them from scratch. It also enables more interactive and engaging applications that communicate with users in natural language. This is just the beginning of the future of GenAI.
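As a rough illustration of layering prompt engineering on a generic model, the sketch below wraps any text-in, text-out model with a domain-specific prompt template. The template wording, the `specialized_model` helper, and the `echo_model` placeholder are all assumptions made for this example; a real deployment would pass in a function that calls an actual pre-trained model.

```python
from string import Template
from typing import Callable

# Assumed template: the wording is illustrative, not a recommended standard.
DOMAIN_PROMPT = Template(
    "You are an assistant for $domain.\n"
    "Answer using only the context below. If the context is insufficient, say so.\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)

def build_prompt(domain: str, context: str, question: str) -> str:
    """Fill the domain template; the underlying model itself is left unchanged."""
    return DOMAIN_PROMPT.substitute(
        domain=domain,
        context=context.strip(),
        question=question.strip(),
    )

def specialized_model(
    generic_model: Callable[[str], str], domain: str
) -> Callable[[str, str], str]:
    """Return a function that sends domain-framed prompts to a generic model."""
    def ask(context: str, question: str) -> str:
        return generic_model(build_prompt(domain, context, question))
    return ask

def echo_model(prompt: str) -> str:
    # Placeholder for a real pre-trained model call.
    return f"[{len(prompt)} prompt characters received]"

if __name__ == "__main__":
    claims_assistant = specialized_model(echo_model, "insurance claims")
    print(claims_assistant("Policy covers water damage.", "Is flood damage covered?"))
```

The generic model is never retrained here. All of the specialization lives in the prompt, which is why this approach is quick to adopt and also why it inherits the limits of the underlying model.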

Once these specialized generative models are implemented in traditional AI applications, the new ecosystem will need to ingest large volumes of data, which will be used to generate output directly from requirements. To fulfill the training requirements of such complex generative models, organizational data from various domains will need to be implemented as connected neural networks. This will create data-centric generative AI, which focuses on improving and enriching training data.
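To make "improving training data" concrete, here is a minimal sketch of one data-centric step: normalizing, filtering, and deduplicating labeled text records before they are used for training. The record fields (`text`, `label`), the `min_chars` threshold, and the sample records are assumptions for illustration; real pipelines would add domain-specific checks.

```python
from typing import Dict, List

def clean_records(records: List[Dict[str, str]], min_chars: int = 20) -> List[Dict[str, str]]:
    """Normalize whitespace and labels, then drop short, unlabeled, or duplicate records."""
    seen = set()
    cleaned = []
    for record in records:
        text = " ".join(record.get("text", "").split())  # collapse runs of whitespace
        label = record.get("label", "").strip().lower()
        key = text.lower()  # case-insensitive duplicate detection
        if len(text) < min_chars or not label or key in seen:
            continue
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    return cleaned

# Invented sample records for the demo.
RAW_RECORDS = [
    {"text": "  Claim approved after   adjuster review.  ", "label": "Approved"},
    {"text": "claim approved after adjuster review.", "label": "approved"},
    {"text": "ok", "label": "approved"},
    {"text": "Claim rejected because the policy had lapsed.", "label": ""},
]

if __name__ == "__main__":
    for row in clean_records(RAW_RECORDS):
        print(row)
```

Of the four sample records, only the first survives: the second is a duplicate, the third is too short, and the fourth has no label.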

One way to achieve data-centric GenAI and shape its future is to create an interconnected neural layer between the pre-trained models, connected in turn to the domain-specific application layer. This layer would allow generic pre-trained models to communicate and collaborate with users through prompts and feedback. It would also enable AI systems to learn and optimize themselves based on their performance and outcomes.

The interconnected neural layer would be the new frontier of AI innovation and value creation. It would allow data-centric generative models to become proficient at creating synthetic data and labeling data, both of which are needed to build vanilla-generated or creative applications. This can be achieved without violating the tenets of classical data management, governance, and security. It would also let organizations harness the diversity and richness of data and knowledge across different domains and disciplines, shaping the future of GenAI.

The way ahead

In the long term, organizations should use prompt engineering to enhance existing generative solutions. They must go beyond pre-trained models and explore new ways of creating and using AI. However, generative AI is not the end goal. It is a stepping stone toward what we call emergent AI, which is not predefined or programmed by humans. It is an AI cluster that emerges from the collective intelligence and behavior of multiple generative models, leading to better ambient, adaptive, and predictive intelligence.

The future of generative AI is not about pre-trained models alone, as most of the world perceives it. It is about how organizations use them productively, improve them, and create new forms of AI that can emerge from them. Today the focus is on prompt engineering, but the way ahead is data-centric and emergent intelligence.
