Language of Choice for Serverless and Containerized Applications in AWS

Many of our customers are modernizing their platforms from legacy stacks to microservice-based architectures on Amazon Web Services (AWS). The source technologies vary widely and include .NET, COBOL, and older versions of Java. To propose a solution that runs at optimal cost and still scales, we consider aspects such as the target application architecture (Kubernetes-based, or AWS serverless options like Lambda or Elastic Container Service (ECS) with Fargate) and the target language (e.g., different Java frameworks, Node.js, Python, .NET). This article outlines approaches that application architects can use to identify such a target technology stack.

Technical considerations for serverless adoption

The major criteria for selecting a proposed technology stack are:

- Nature of transactions

  - Uniform throughout the day, with a smooth, gradual curve for any scale-in and scale-out. An example is an insurance premium payment application, where policyholders pay their premiums within a few days of the policy issue date each year. Since customers purchase policies evenly throughout the year, the premium payments are also evenly distributed.

  - High and sudden spikes.

- Number of external application integrations in a transaction life cycle.

- Response time requirements

- Complexity of computation logic

- Technology stack affinity of customers

Let us see how each of these attributes impacts the technology selection process.

Nature of transactions:

Uniform transaction patterns, as well as very high concurrency, are better served by ECS and Elastic Kubernetes Service (EKS), because abrupt scale-out (for example, an increase of 100 transactions per second within a second) and scale-in are rare in such workloads. The occasional abrupt scale-in or scale-out can be absorbed by calculated overprovisioning, without any performance impact. Short-lived transactions and spiky patterns are better served by AWS Lambda, where the cost is governed by the number of requests and the GB-seconds consumed, provided the concurrency stays below 1,000 (the default account quota) across all functions in a region.

AWS Lambda provides a much more cost-effective deployment for applications with high, sudden spikes during the daytime and very few requests during lean periods, as long as the peak transaction concurrency is moderate to low. For any containerized application deployment, application startup and execution must be extremely fast to realize the cost benefits without a performance impact.
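The request and GB-second cost model mentioned above can be sketched as a small calculation. This is a minimal sketch, not a pricing tool: the class name, the method names, and the rates used in main are illustrative assumptions, and actual rates should be taken from the current AWS pricing page.

```java
// Sketch of the Lambda cost model: request charge plus compute charge (GB-seconds).
// All rates are supplied by the caller; the values in main() are placeholders.
final class LambdaCostEstimate {

    /** GB-seconds = invocations * average duration (s) * configured memory (GB). */
    static double gbSeconds(long invocations, double avgDurationMs, int memoryMb) {
        return invocations * (avgDurationMs / 1000.0) * (memoryMb / 1024.0);
    }

    /** Total cost = request charge + GB-second charge for the given period. */
    static double cost(long invocations, double avgDurationMs, int memoryMb,
                       double pricePerMillionRequests, double pricePerGbSecond) {
        double requestCharge = (invocations / 1_000_000.0) * pricePerMillionRequests;
        double computeCharge = gbSeconds(invocations, avgDurationMs, memoryMb) * pricePerGbSecond;
        return requestCharge + computeCharge;
    }

    public static void main(String[] args) {
        // Hypothetical workload: 2 million requests, 100 ms average duration, 1024 MB.
        // Hypothetical rates: 0.20 per million requests, 0.0000166667 per GB-second.
        double estimate = cost(2_000_000L, 100.0, 1024, 0.20, 0.0000166667);
        System.out.printf("Estimated cost for the period: %.2f%n", estimate);
    }
}
```

Because the compute charge scales with both duration and configured memory, a stack that starts and executes quickly benefits directly in this model, which is why start-up time features so prominently in the comparison later in this article.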

Applications that integrate with multiple external APIs respond faster if connections are cached and reused across transactions, because every new connection to an external application adds to the response time. In Lambda, caching has less impact: each execution environment serves one request at a time and can be reclaimed once it is idle, and keeping instances warm adds to the cost. Hence, for workloads with many requests of this kind, ECS- or EKS-based deployments will have better response times than AWS Lambda.
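The idea of caching a connection and reusing it across transactions can be illustrated with a minimal sketch. It uses only the JDK's java.net.http.HttpClient (Java 11+); the class name, the handle method, and the creation counter are hypothetical and exist only to make the reuse visible. A real service would call the external API through the shared client.

```java
import java.net.http.HttpClient;
import java.time.Duration;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: build the expensive client once per runtime instance and reuse it
// for every request, instead of building a new one inside the handler.
final class ReusableClientHandler {

    // Counts how many clients were built; present only for demonstration.
    static final AtomicInteger CLIENTS_CREATED = new AtomicInteger();

    // Initialized once when the class loads (for example at container start).
    private static final HttpClient SHARED_CLIENT = buildClient();

    private static HttpClient buildClient() {
        CLIENTS_CREATED.incrementAndGet();
        return HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
    }

    // Hypothetical per-request entry point; a real handler would issue
    // SHARED_CLIENT.send(...) against the external API here.
    static String handle(String requestId) {
        return requestId + " served with client " + System.identityHashCode(SHARED_CLIENT);
    }

    public static void main(String[] args) {
        for (int i = 1; i <= 3; i++) {
            System.out.println(handle("req-" + i));
        }
        System.out.println("Clients created: " + CLIENTS_CREATED.get());
    }
}
```

In a long-running ECS or EKS container, the shared client lives for the lifetime of the process, so the connection setup cost is paid once and amortized over many requests.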

Tech-stack affinity often decides the target technology. For example, if a customer prefers .NET, ECS is a better option, as Lambda will have a poor cold start. ECS with Fargate becomes the default choice when both containerized deployment and the operational ease of a serverless stack are preferred. Java may be the obvious choice if the application requires complex computations and fast, parallel processing. Similarly, if the application is already written in Java (say, as a monolith), it is better to keep Java as the technology stack than to migrate to Python or Node.js, because some of the business logic code can be reused with minimal change.

When deploying a Java microservice in AWS Lambda, the cold start becomes a key consideration, as frameworks like Spring Boot need around 10 seconds to start a container. This also hampers fast scale-out.
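The selection guidance discussed so far can be condensed into a rough decision sketch. This is a deliberate simplification for illustration only: the class name, the boolean inputs, and the order of the checks are assumptions, and a real assessment would weigh these factors rather than apply them as hard rules.

```java
// Simplified encoding of the selection guidance discussed above.
// Inputs and check order are illustrative assumptions, not a definitive rule set.
final class DeploymentChooser {

    enum Target { LAMBDA, ECS_OR_EKS }

    static Target choose(boolean suddenSpikes,
                         boolean highPeakConcurrency,
                         boolean manyExternalIntegrations,
                         boolean slowStartupStack) {
        // Slow-starting stacks (for example Spring Boot or .NET) suffer from cold starts.
        if (slowStartupStack) {
            return Target.ECS_OR_EKS;
        }
        // Very high concurrency and cached connections favor long-running containers.
        if (highPeakConcurrency || manyExternalIntegrations) {
            return Target.ECS_OR_EKS;
        }
        // Spiky traffic with lean periods and moderate concurrency favors Lambda.
        if (suddenSpikes) {
            return Target.LAMBDA;
        }
        // Uniform traffic: containers with calculated overprovisioning.
        return Target.ECS_OR_EKS;
    }

    public static void main(String[] args) {
        System.out.println("Spiky, moderate concurrency: "
                + choose(true, false, false, false));
        System.out.println("Uniform traffic, many integrations: "
                + choose(false, false, true, false));
    }
}
```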

New contenders: Quarkus & Micronaut

The cold-start limitation described in the section above has been largely overcome by lightweight Java frameworks like Micronaut and Quarkus. When these are combined with GraalVM to generate a native image, start-up becomes much faster and run-time performance improves. The GraalVM Community Edition is free for commercial use. Its ahead-of-time (AOT) compiler generates optimized machine code for a specific target platform, which yields a fast start-up time and a low memory footprint.

Both Micronaut and Quarkus have well-developed library support for AWS service integrations and for reactive invocation of RDS, Kafka, and Redis clients. By default, native images built with these two frameworks do not support the reflection needed for object serialization and deserialization. Micronaut provides @Introspected, whereas Quarkus provides @RegisterForReflection, to address this.

Evaluation/comparison methodology

To compare the performance of common Java frameworks with Node.js, we developed a simple CRUD application on each stack, backed by RDS for PostgreSQL, with the database credentials kept encrypted in the SSM Parameter Store. The applications were deployed in AWS Lambda in a private subnet, using VPC endpoints to connect to AWS Systems Manager (SSM). The observations were as follows:

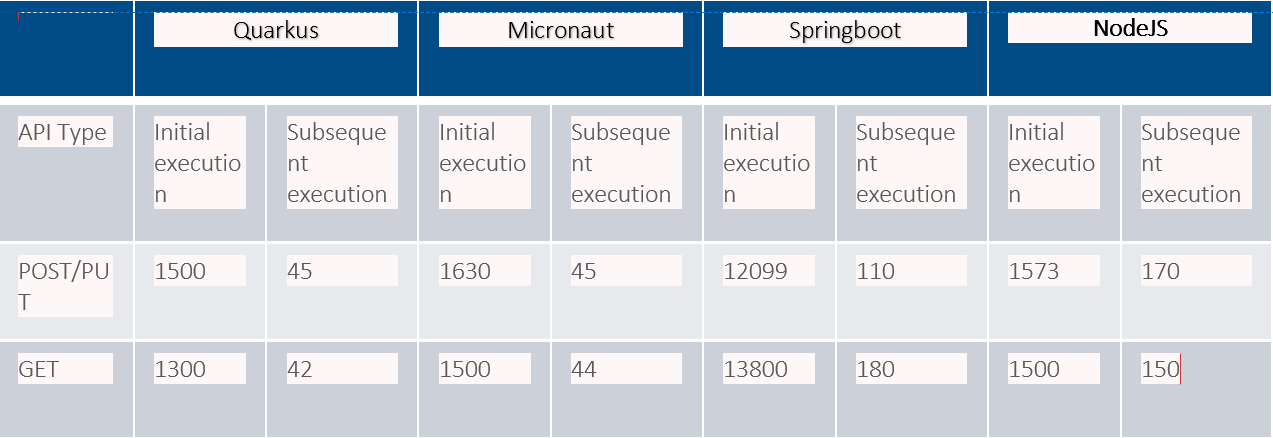

- All figures in the table are in milliseconds. The response time is measured at the API Gateway.

- Lambdas are configured with 1024 MB RAM.

- The execution time figures are an average of 100 consecutive executions.

- Spring Boot (Java 11), Quarkus (in GraalVM with Java 11), Micronaut (in GraalVM with Java 11), and Node.js (version 16) were used for the comparison.
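Averaging over repeated executions, as described in the notes above, can be sketched as follows. This is not the harness used for the published figures: the class and method names are hypothetical, the workload in main is a stand-in, and timing taken from the caller's side will differ from response times measured at the API Gateway.

```java
// Sketch: average the duration of repeated calls, or of already collected samples.
final class LatencyAverager {

    /** Runs the call the given number of times and returns the mean duration in ms. */
    static double averageMillis(Runnable call, int runs) {
        if (runs <= 0) {
            throw new IllegalArgumentException("runs must be positive");
        }
        long totalNanos = 0L;
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            call.run();
            totalNanos += System.nanoTime() - start;
        }
        return totalNanos / 1_000_000.0 / runs;
    }

    /** Mean of collected samples, for example response times exported from logs. */
    static double mean(long[] samplesMs) {
        if (samplesMs.length == 0) {
            throw new IllegalArgumentException("at least one sample is required");
        }
        long sum = 0L;
        for (long sample : samplesMs) {
            sum += sample;
        }
        return (double) sum / samplesMs.length;
    }

    public static void main(String[] args) {
        // Stand-in workload; a real run would invoke the deployed endpoint here.
        Runnable standIn = () -> Math.sqrt(12345.678);
        System.out.println("Average over 100 runs (ms): " + averageMillis(standIn, 100));
    }
}
```

Cold-start invocations are best reported separately from the warm-execution average, since a single slow first call can dominate a mean taken over a small number of runs.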

Table 1: Performance metrics comparison across tech stacks

Observations

- During Lambda instance creation, the SSM connection added some latency in all the stacks.

- Quarkus and Micronaut in GraalVM perform similarly.

- Considering its high start-up time, Spring Boot should not be considered for Function-as-a-Service (FaaS).

- Node.js has a start-up time similar to that of Micronaut and Quarkus.

- The average execution time in Node.js is slightly higher than in Quarkus or Micronaut.

- Although the Java-based runtimes do not perform much better at lower memory settings such as 512 MB, their performance at 1024 MB is remarkably good.

Creating a native image with Quarkus and Micronaut

Both Quarkus and Micronaut can create native images without GraalVM installed on the local machine. The build commands for images to be deployed in AWS Lambda are:

Micronaut:

./mvnw package -Dpackaging=docker-native -Dmicronaut.runtime=lambda -Pgraalvm

Quarkus:

./mvnw package -Pnative -Dquarkus.native.container-build=true

The above requires the Docker runtime and Java 11+ to be available on the build machine. Each framework has its own mechanism for letting the runtime image locate the Lambda handler, so that the handler name does not have to be configured explicitly in Lambda. Micronaut has the following in its pom.xml:

<micronaut.runtime>lambda</micronaut.runtime>

<exec.mainClass>com.ltimindtree.FunctionLambdaRuntime</exec.mainClass>

Quarkus requires annotating its RequestHandler with a name, as below:

@Named("myLambda") // myLambda is the name for this example

This RequestHandler is configured in the application.properties using the following:

quarkus.lambda.handler = myLambda

Both frameworks create a zip file to be uploaded to AWS Lambda as a code source.

Details of the configurations are in the links in the References section of this blog.

Limitations of using Quarkus & Micronaut in AWS Lambda

Some technology owners prefer to see the application code in the AWS Management Console, as that can help with analysis during troubleshooting. From that perspective, Node.js or Python has an edge, since a compiled native image cannot be viewed there. Ideally, however, the code should be committed to a source control tool like Git before a build is created and deployed.

Also, the Java-based packages (jar/zip files) are larger than those generated from Node.js or Python. Hence, data transfer during deployment may take a little longer.

Conclusion

With GraalVM and lightweight frameworks like Quarkus and Micronaut, the cold start of Java in serverless application models is no longer a significant concern. If the existing source code is in Java, even as a monolithic application, we suggest keeping Java as the target platform, as the conversion will be easier and faster. These new frameworks mainly require the addition of the wrapper and dependencies to use Netty as the default embedded HTTP server. Netty is a high-performance, non-blocking I/O-based application framework that helps to achieve scalability. In addition, some framework-related annotations are recommended for cloud-native service integrations and for integration with regular RDBMS, Apache Kafka, Redis, etc.

Since these frameworks have excellent processing speed when deployed as GraalVM native images, converting even Node.js or Python-based code to Java is worth considering when high performance and scalability are vital criteria.

References

https://guides.micronaut.io/latest/mn-serverless-function-aws-lambda-graalvm-maven-java.html

https://quarkus.io/guides/

https://github.com/aws-samples/aws-quarkus-demo

https://github.com/quarkusio/quarkus/blob/main/docs/src/main/asciidoc/amazon-lambda.adoc

https://quarkus.io/guides/building-native-image#container-runtime
