Getting the Maximum Value from your Snowflake Data Cloud Investment

Harnessing the immense power of the cloud with Snowflake’s platform.

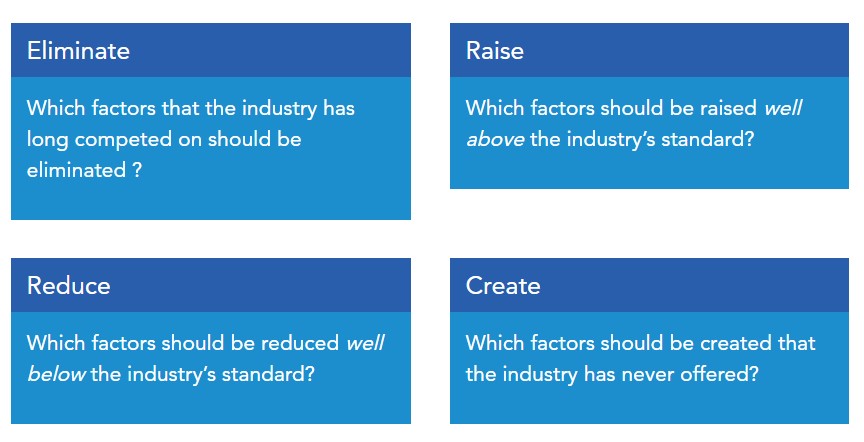

When I was growing up, I watched Michael Porter, the most famous strategic thinker of our times, saying on television that firms could pursue only one of two ways of competing: low cost or differentiation. When I went to business school later, I was in awe of W. Chan Kim and Renée Mauborgne, who had challenged Porter in their book Blue Ocean Strategy. It was about the simultaneous pursuit of differentiation and low cost to create new markets using the ERRC (Eliminate, Reduce, Raise, and Create) framework shown below:

After business school, when I joined the IT industry, I did not have the chance to work with or watch closely a firm that effectively employed the Blue Ocean strategy. In 2015, Snowflake launched a cloud data platform for analytical and big data processing with a simple value proposition: Better performance at scale with significantly lower cost. How did they do it?

They eliminated the tight coupling of compute and storage for big data processing. They raised the standards of performance at scale by leveraging the cloud’s unlimited resources. They reduced the need for highly skilled professionals, both to write complex code that converts big data into value (through a familiar and easy-to-use lingua franca, SQL) and to manage complex infrastructure (through self-provisioning and self-healing features). And they created features hitherto unknown in the industry, like Secure Data Sharing and Data Marketplace. Snowflake created the network effect with the Snowflake Data Cloud, where organizations have seamless access to explore, share, and unlock the true value of their data.

The result: Snowflake gained huge momentum in the market, and today more than 4,000 customers use the Snowflake Data Cloud. With its ease of use and lower costs, Snowflake has truly democratized data and made it possible for every organization, big or small, to be data-driven.

As a Data Cloud, Snowflake can be considered analogous to a source of unlimited water that can be drawn by anyone, where one pays only for what is drawn (a consumption-based pricing model). Some may use it for irrigation, some for generating electricity, some for providing clean drinking water, some for fighting fires, and so on. It is up to each user what they use the water for and how efficiently they use it to realize the maximum value of their investment.

Larsen and Toubro InfoTech (LTIMindtree) is an elite Service partner of Snowflake. It has been responsible for implementing Snowflake across multiple customers and helping them migrate existing workloads to Snowflake. In doing so, LTIMindtree has combined its domain knowledge from working with these customers for many years with its Snowflake experience and expertise into a proprietary framework of processes, tools, and accelerators that helps customers get maximum value from their Snowflake investments.

A Framework for Maximizing Value from Your Snowflake Investments

Snowflake provides enormous power to its users. In the past, big data analytical workloads would often be constrained by the ability to scale up compute and storage resources. Customers had to manage a fixed amount of capacity. This is no longer the case, as unlimited resources can be provisioned and made available in seconds with Snowflake. Accidentally deleting data was a nightmare in the past. With Snowflake features such as Time Travel, users can go back in history (up to 90 days) and restore data that was corrupted or accidentally deleted. The power and capabilities in the hands of users increased more than a hundredfold after switching to Snowflake. With power must come responsibility and accountability.

Moving from a capacity model to a cost-effective consumption model forces a shift in the way users interact with the system. The only limits now are the customer's imagination and budget. At Larsen and Toubro InfoTech, while working with customers during the early days of their Snowflake journey, we observed several scenarios where Snowflake was not being used optimally. One customer truncated and reloaded a database table daily while Time Travel was enabled for 90 days. In crude terms, this means that on every load the table is emptied and reloaded with all the data, and each day's full copy is retained for 90 days. This table alone occupied about 90 times its current size in storage, even though the business had no requirement for that history. Another customer was running a query for several hours, and the query failed at the end because of poor design. The query was consuming resources without providing any value.
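To make the truncate-and-load scenario concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the table size stays constant, that every daily reload replaces all rows, and it ignores compression and Fail-safe storage. The function name and the 100 GB table size are hypothetical, chosen only for illustration.

```python
def estimate_truncate_and_load_storage(
    table_size_gb: float, retention_days: int, daily_reloads_so_far: int
) -> dict:
    """Rough storage estimate for a table that is fully reloaded once a day.

    Assumption: each reload replaces every row, so the previous full copy
    is kept in Time Travel until the retention period expires.
    """
    # Only copies created within the retention window are still retained.
    retained_copies = min(retention_days, daily_reloads_so_far)
    time_travel_gb = retained_copies * table_size_gb
    return {
        "active_gb": table_size_gb,
        "time_travel_gb": time_travel_gb,
        "total_gb": table_size_gb + time_travel_gb,
        "multiple_of_active": time_travel_gb / table_size_gb,
    }


# Hypothetical 100 GB table, 90-day retention, reloaded daily for a year.
estimate = estimate_truncate_and_load_storage(100.0, 90, 365)
print(estimate["multiple_of_active"])  # 90.0
```

Under the same assumptions, lowering the retention to one day would shrink the historical copies to roughly one times the table size, which is why retention settings deserve a look for tables that are fully reloaded.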

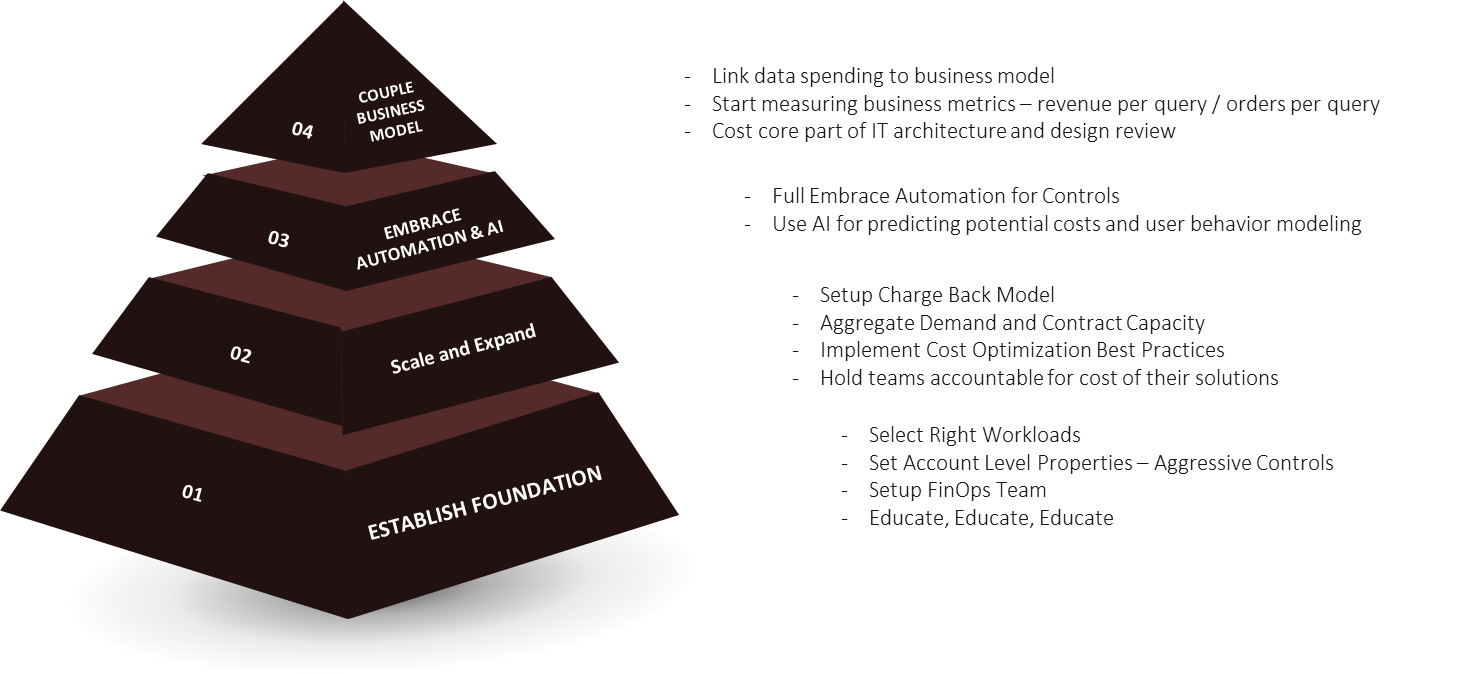

LTIMindtree, as a Global Service Partner for Snowflake, has created a framework and tools to make sure that every dollar spent with Snowflake is aligned with business results. This is available through Canvas Polar Sled FinOps. It integrates controls, best practices, machine learning, automation, and cost mapping to get the most value from your Snowflake investment.

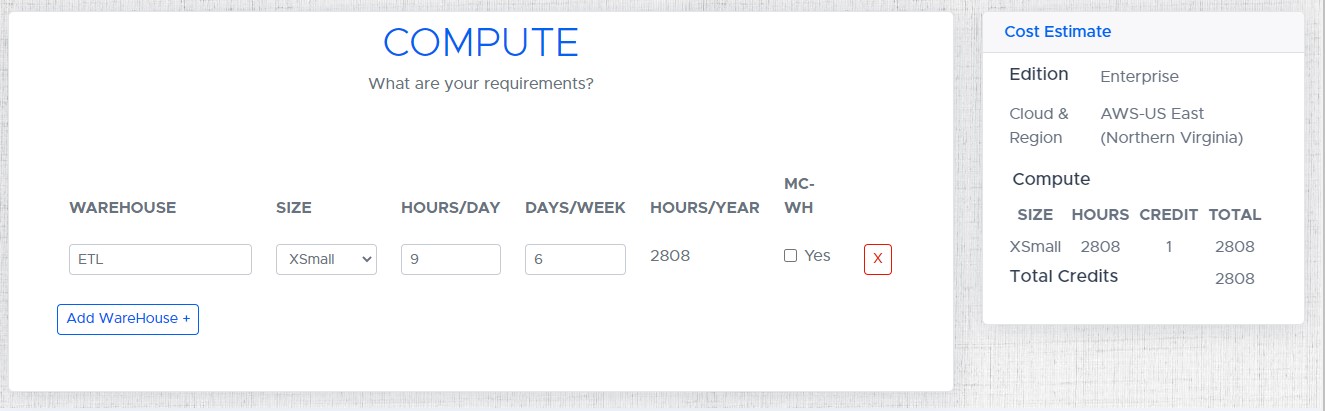

At the very bottom of the pyramid is the setting up of controls to avoid unnecessary expenditure. This can be done by training users on how best to use Snowflake according to the practices recommended by Snowflake and our own know-how. For example, before migrating a workload to Snowflake, say loading data, we provide a cost calculator to calculate the annual compute credits for that workload, as shown below. This is based on a simplistic assumption that a warehouse of size XS will run for nine hours a day, six days a week, and consume 2,808 credits annually.

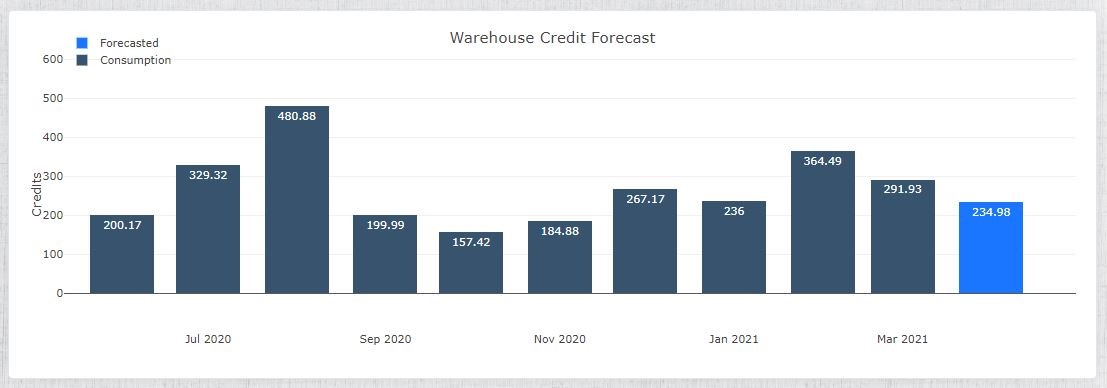
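The arithmetic behind that estimate can be sketched in a few lines of Python. This is a minimal illustration, not the actual calculator: it assumes a standard warehouse that is billed at one credit per hour for size XS and doubles with each size up, runs for a fixed schedule over 52 weeks, and it ignores auto-suspend, per-second billing, and multi-cluster scaling. The function and table names are hypothetical.

```python
# Credits per hour for standard warehouses; each size up doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def annual_compute_credits(
    size: str, hours_per_day: float, days_per_week: float, weeks_per_year: int = 52
) -> float:
    """Estimate yearly credits for a warehouse running on a fixed schedule."""
    return CREDITS_PER_HOUR[size] * hours_per_day * days_per_week * weeks_per_year


# The scenario from the text: XS, nine hours a day, six days a week.
print(annual_compute_credits("XS", 9, 6))  # 2808
```

Running the same schedule on a size S warehouse would double the figure, which is one reason the initial sizing assumption matters so much in an up-front estimate.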

We then use the telemetry data provided by Snowflake to show the actual consumption post migration, as shown in the diagram below.

In this diagram, for the first 10 months, i.e., from June 2020 to March 2021, the system shows the actual consumption month on month. Against the initial estimate of about 2,800 credits for the year, about 2,700 credits have already been consumed in the first 10 months of usage. By deploying machine learning on top of the actual consumption of the past period, we can now forecast future consumption better, instead of relying on the simplistic estimate we created at the start. Future versions of FinOps will also help to predict storage use similarly, which is particularly relevant when Time Travel is involved. This helps users stay conscious of their consumption: what they estimated at the start, what it turned out to be later, and how the system helps them make better forecasts for the future.
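As a point of comparison for the figures above, here is a naive run-rate projection in Python. It is only a baseline sketch, not the machine learning model used in FinOps: it assumes that consumption continues at the average monthly rate seen so far. The function name is hypothetical; the inputs are the approximate numbers quoted in the text.

```python
def run_rate_projection(
    credits_so_far: float, months_elapsed: int, months_in_period: int = 12
) -> float:
    """Project full-period consumption from the average monthly rate so far."""
    monthly_average = credits_so_far / months_elapsed
    return monthly_average * months_in_period


initial_estimate = 2808  # credits, from the up-front cost calculator
projected = run_rate_projection(2700, 10)  # about 2,700 credits in 10 months

print(projected)                     # 3240.0
print(projected - initial_estimate)  # 432.0
```

Even this simple baseline suggests the year would end a few hundred credits above the initial estimate. A model trained on the month-by-month telemetry can go further by picking up trends and seasonality that a flat average misses.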

The next step in the pyramid is to not just be conscious of your usage but to also be accountable for your actions. Some organizations implement a chargeback model to recover the cost of actual Snowflake usage from individual departments or lines of business. At this stage, whether you charge back or not, it is essential to ensure accountability among users organization-wide. With thousands of queries running, understanding how users are running those queries and identifying any outliers can avoid unnecessary costs.
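A simple chargeback can be sketched as a proportional split of the bill by credits consumed. The Python below is a minimal illustration under that assumption; the department names, credit figures, and total cost are hypothetical, and real chargeback models may also allocate storage and shared overheads.

```python
def chargeback(
    credits_by_department: dict[str, float], total_cost: float
) -> dict[str, float]:
    """Split a total bill across departments in proportion to credits used."""
    total_credits = sum(credits_by_department.values())
    if total_credits == 0:
        # Nothing was consumed, so nothing is charged back.
        return {dept: 0.0 for dept in credits_by_department}
    return {
        dept: round(total_cost * credits / total_credits, 2)
        for dept, credits in credits_by_department.items()
    }


# Hypothetical monthly usage and bill, for illustration only.
usage = {"finance": 500, "marketing": 300, "operations": 200}
print(chargeback(usage, 3000.0))
# {'finance': 1500.0, 'marketing': 900.0, 'operations': 600.0}
```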

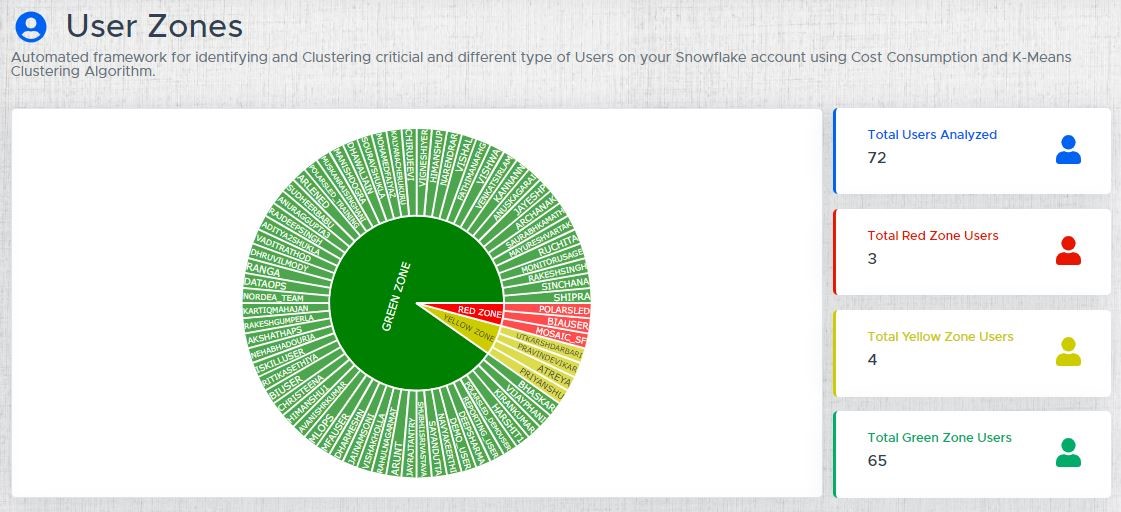

FinOps can automatically cluster users by their platform usage and identify areas that need investigation. In the example below, we can clearly identify the group of users that needs some analysis. Clicking on any of those users takes us to the root cause of those anomalies.
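The idea of surfacing unusual users can be illustrated with a much simpler technique than clustering. The Python sketch below flags users whose consumption is far above the median, using a modified z-score based on the median absolute deviation. This is a stand-in chosen for brevity, not the method FinOps uses; the function name, threshold, and user figures are hypothetical.

```python
from statistics import median


def flag_high_usage_users(
    credits_by_user: dict[str, float], threshold: float = 3.5
) -> list[str]:
    """Return users whose usage is unusually high relative to their peers."""
    values = list(credits_by_user.values())
    med = median(values)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure against, so flag nothing
    return sorted(
        user
        for user, credits in credits_by_user.items()
        if 0.6745 * (credits - med) / mad > threshold
    )


# Hypothetical weekly credits per user, for illustration only.
usage = {"user_a": 10, "user_b": 12, "user_c": 11, "user_d": 9, "user_e": 95}
print(flag_high_usage_users(usage))  # ['user_e']
```

A median-based score is used here because a single heavy user would otherwise inflate the mean and standard deviation and hide itself.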

Further efficiencies can be squeezed out of the system by embracing automation to implement control mechanisms without manual intervention. For example, in the above case, rules can be created to automatically disable the usage rights on a compute resource for a user or application without the account admin taking manual action.
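Such a rule could look roughly like the Python sketch below, which turns a credit limit into `REVOKE USAGE ON WAREHOUSE` statements. It is an illustration only: it assumes each user or application is mapped to its own role, it only builds the SQL text and does not connect to Snowflake or execute anything, and the role names, warehouse name, and limit are hypothetical. Identifiers are inserted as-is, so they should come from a trusted source.

```python
def build_revoke_statements(
    credits_by_role: dict[str, float], warehouse: str, credit_limit: float
) -> list[str]:
    """Build REVOKE statements for roles that exceeded the credit limit."""
    return [
        f"REVOKE USAGE ON WAREHOUSE {warehouse} FROM ROLE {role};"
        for role, credits_used in sorted(credits_by_role.items())
        if credits_used > credit_limit
    ]


# Hypothetical monthly credits per role, for illustration only.
usage = {"ETL_ROLE": 120, "ADHOC_ROLE": 480}
for statement in build_revoke_statements(usage, "ANALYTICS_WH", 400):
    print(statement)
# REVOKE USAGE ON WAREHOUSE ANALYTICS_WH FROM ROLE ADHOC_ROLE;
```

In practice, a rule like this would usually be paired with a notification to the affected team, so that access can be restored once the cause is understood.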

While the first three levels of the pyramid help to increase the efficiency of your Snowflake usage, the last level at the top is focused on effectiveness, i.e., on whether you are doing the right things. The ultimate measure of effectiveness is the value you derive vs. the cost incurred.

LTIMindtree customers can get insights into metrics like revenue per query when using FinOps. One of our manufacturing customers uses Snowflake to collect IoT data from all their devices. They also bring all other product and customer-related information from different systems into Snowflake. With all the information on one platform, they can deploy Snowflake’s unlimited compute and storage capabilities to get insights from this data as quickly as possible. They can improve their product design and give the right advice to their customers based on usage observed across other customers. This has helped them transform from a product-centric organization into a customer-centric one, which led to increased revenue and market share. The queries they used to analyze this data directly resulted in top-line and market share growth for the product.

LTIMindtree has combined all its experience and knowledge into Canvas Polar Sled FinOps. At LTIMindtree, we can provide better services through automation and artificial intelligence that translate into better quality at a lower cost. If you are interested in knowing more, please talk to us.

The author, Sumukh Guruprasad, is a Snowflake Evangelist for LTIMindtree and its customers and can be reached at Sumukh.guruprasad@lntinfotech.com
