Creating Scalable Knowledge in Software Delivery Life Cycle (SDLC) through Ontology

In today’s business world, software plays a vital role. To ensure that software is developed and delivered effectively, it is important to have a well-defined process in place. This process is called the Software Delivery Life Cycle (SDLC).

The diverse tools that support the SDLC generate large amounts of data, which often stays isolated within a single process or phase. This blog highlights the importance of using those large datasets across the whole SDLC. One outcome of mapping data across the SDLC is scalable knowledge creation: the resulting knowledge can be used to diagnose software errors and to make architecture or design flaws visible. This brings us to ontology.

What is Ontology?

Ontology is a way to bring order to data by describing the “relations” between objects, which helps organize vast amounts of information, as in Wikipedia or Google Search.

An ontology is a formal way of describing how things are related. In the context of the SDLC, an ontology can be used to define the relationships between the different phases. The term itself comes from philosophy, where it refers to the study of what is real and what isn’t.
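To make the idea of "relations between objects" concrete, here is a minimal sketch that stores SDLC facts as (subject, relation, object) triples and follows them from a requirement to a defect. The identifiers (`REQ-1`, `commit-a1`, and so on) and the relation names are hypothetical and chosen only for illustration; a real SDLC ontology would define its own vocabulary.

```python
from collections import defaultdict

# Hypothetical SDLC facts as (subject, relation, object) triples.
TRIPLES = [
    ("REQ-1", "implemented_by", "commit-a1"),
    ("commit-a1", "built_in", "build-17"),
    ("build-17", "verified_by", "test-run-42"),
    ("test-run-42", "raised", "DEF-9"),
]


def build_index(triples):
    """Index outgoing edges by subject so relations can be followed quickly."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[subject].append((relation, obj))
    return index


def trace(index, start):
    """Return every (subject, relation, object) edge reachable from start."""
    path, stack, seen = [], [start], {start}
    while stack:
        node = stack.pop()
        for relation, obj in index.get(node, []):
            path.append((node, relation, obj))
            if obj not in seen:
                seen.add(obj)
                stack.append(obj)
    return path


index = build_index(TRIPLES)
for edge in trace(index, "REQ-1"):
    print(edge)
```

Because every phase is linked through explicit relations, data that would otherwise sit in separate tools can be queried as one connected structure.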

Why is ontology so important?

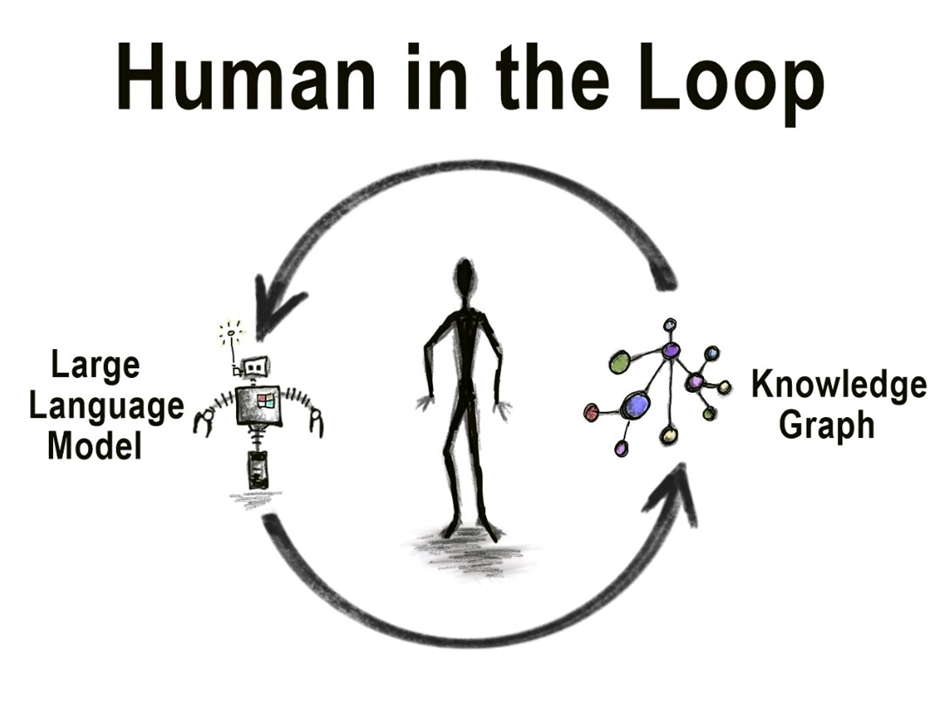

Ontology is a critical way to structure the world so that machines can understand what we humans classify and how we classify it. Human-engineered ontologies let people impart their cognitive patterns to machines. We must embed human values and concerns into the feedback loop that transforms AI within the SDLC. This creates a closed-loop transfer function, based on knowledge graphs, whose outcomes are measurable. It also establishes responsible AI guardrails that enhance overall productivity within the SDLC.

Since the internet makes information accessible to everyone, that information must be structured clearly. It must be usable both by machines and by people with different languages and skills. This means working out the human role in developing ontologies.

How important is human involvement in building ontologies?

One of the essential capabilities of Machine Learning (ML) is grouping data, which makes it possible to create a machine-oriented SDLC ontology. The opportunity, and the challenge, lies in making the output of such a system (for example, a deep learning model or neural network) interpretable to humans. Another challenge is aligning such machine procedures with human representations, which stem from the human perception of reality. Therefore, it is essential to build on unstructured data.

Figure 1: Human in the loop, Tony Seale, LinkedIn

Human perception of reality

Humans tend to create a shared truth, and deep AI algorithms need high accuracy to mimic it. Comparing the human approach to establishing the gold standard of human ontology with machine-generated outcomes is always intriguing.

Here are different approaches to arrive at a common ground truth:

Common interpretation through common agreement

For example, imagine a simple captcha image in which humans can read distorted characters. Can we extend the way humans read and type these distorted characters to digitizing books?

Computers need to interpret words in a way comparable to how humans understand them. We arrive at a common ground truth by having different people agree on an interpretation. If ten people agree on the reading of the same captcha image, that reading becomes a common ground truth.
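The agreement rule described above can be sketched as a small function. This is only an illustration: the function name `ground_truth`, the default threshold of ten, and the decision to ignore case and surrounding whitespace are assumptions, not part of any real captcha service.

```python
from collections import Counter


def ground_truth(readings, min_agreement=10):
    """Return the reading typed by at least min_agreement people, else None."""
    if not readings:
        return None
    # Assumption: readings that differ only in case or padding count as equal.
    normalized = [reading.strip().lower() for reading in readings]
    answer, count = Counter(normalized).most_common(1)[0]
    return answer if count >= min_agreement else None


# Hypothetical readings of one distorted word.
readings = ["Morning"] * 10 + ["moming"] * 2
print(ground_truth(readings))
```

Until enough people agree, the function returns `None`, which mirrors the idea that a reading only becomes ground truth through shared agreement.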

Common interpretation through maximum consensus

Let’s consider language translation. For example, anyone who knows just English can attempt to learn a language in an app called Duolingo. Doing justice to both the meaning and the grammar is difficult. The more complex the data, the more difficult it is to establish a common truth. In this case, one approach could be for people to vote on each other’s translations and determine which is better.
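As a rough sketch of voting on translations, the function below picks the candidate with the highest net score. The scoring rule (upvotes minus downvotes), the function name `best_translation`, and the sample sentences are assumptions made for illustration and do not describe how any particular app ranks translations.

```python
def best_translation(votes):
    """Pick the candidate with the highest net score.

    votes maps each candidate translation to a (upvotes, downvotes) pair.
    """
    def net_score(item):
        _, (up, down) = item
        return up - down

    candidate, _ = max(votes.items(), key=net_score)
    return candidate


# Hypothetical votes on three candidate translations of one sentence.
votes = {
    "The cat sleeps.": (12, 1),
    "The cat is sleeping.": (15, 2),
    "Cat sleep.": (1, 9),
}
print(best_translation(votes))
```

Unlike the fixed agreement threshold used for captchas, this approach always returns the relatively best candidate, which suits data where no single answer is strictly correct.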

Common interpretation using specific interventions at scale

In specific domains such as healthcare, the power of building a suitable ontology lies in leveraging and augmenting it for scalability, which is something we can let the machines do.

We would still need a way for diverse stakeholders, including machines and humans, to reach the same understanding through open systems. This would allow multiple human experts to provide validation and to scale at the n-1 layer, leveraging ML/AI and automation.

Common interpretation using open, international classification

For many emerging areas, especially the SDLC, we could draw on the story of the internet to create an international classification system. This system would merge knowledge into a single source of truth for a group of people. Such a collective classification can also provide a standardized set of reference cases that show how individuals and collective groups compare to a given value.

The above approaches indicate that the majority result is often right and that minority results can be singled out. We can only grasp the knowledge held by various experts and participants through reference cases.

Understanding the SDLC ontology approach

Ultimately, what can be considered as a set? It is an approximation that asymptotically approaches reality. We should never expect 100% factual accuracy. We can move toward a better approximation by drawing on more sources of information and on more agents interacting around those sources across the SDLC. It’s not only about understanding the problem, but also about understanding the agent. Our preferences are often delegated to agents that represent a digitized system, and this delegation happens for subsets of issues, following those patterns.

In the SDLC, human knowledge spans various areas, and human involvement is key because people solve specific issues in some areas more proficiently than in others. This knowledge can be delegated to a system that optimizes through the agents who know better. Occasionally, we have to give developers problems whose answers we already know in order to assign weights, and then correct those weights accurately and efficiently. This is collective intelligence.
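One possible reading of "assigning weights from answers we already know" is sketched below: each contributor is weighted by accuracy on questions with known answers, and those weights then decide an open question. The function names, the contributor roles, and the diagnosis labels are all hypothetical, and accuracy-based weighting is only one of several schemes that could fit this description.

```python
from collections import defaultdict


def calibrate(answers, known):
    """Weight each contributor by accuracy on questions with known answers."""
    weights = {}
    for person, given in answers.items():
        scored = [q for q in known if q in given]
        correct = sum(given[q] == known[q] for q in scored)
        weights[person] = correct / len(scored) if scored else 0.0
    return weights


def weighted_answer(answers, weights, question):
    """Pick the answer with the largest total weight behind it."""
    totals = defaultdict(float)
    for person, given in answers.items():
        if question in given:
            totals[given[question]] += weights.get(person, 0.0)
    return max(totals, key=totals.get) if totals else None


# Hypothetical data: q1 and q2 have known answers, q3 is still open.
known = {"q1": "flaky-test", "q2": "config-drift"}
answers = {
    "dev": {"q1": "flaky-test", "q2": "config-drift", "q3": "race-condition"},
    "tester": {"q1": "flaky-test", "q2": "bad-data", "q3": "timeout"},
    "analyst": {"q1": "bad-data", "q2": "bad-data", "q3": "timeout"},
}

weights = calibrate(answers, known)
print(weights)
print(weighted_answer(answers, weights, "q3"))
```

In this sample a plain majority would pick `timeout` for `q3`, while the weighted result follows the contributor who was most accurate on the known questions, which is the sense in which the system "optimizes through agents who know better".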

The collective intelligence within SDLC is influenced by a fundamental human need – motivation, also referred to as incentivization.

What motivates or incentivizes individuals to contribute towards a better SDLC ontology?

The way to incentivize developers, testers, product owners, and scrum masters to contribute towards a better SDLC ontology, and to improve the resulting outcome, is through the following reward mechanisms:

- Monetary incentives

- A challenging problem that captures the team’s curiosity

- Knowledge sharing

- Building social significance

- Desire to contribute

- Gamified elements

- Loyalty points rewards

Emerging technologies such as distributed, decentralized systems and blockchain, with contributors compensated in tokens, look promising as a way to use ontologies as prediction markers.

Conclusion

The ultimate goal is to understand how software developers and other SDLC personas make decisions and how they think about those decisions. By encoding this information in a cost-effective manner, we can broaden access for underserved developers in the community. This would involve creating a scalable system that provides affordable access to knowledge. Developers are not being replaced; they are being provided with affordable tools that extend their capacity to develop and increase their productivity.

Every person (developer, tester, analyst) who touches the code should answer the same question: How do I create scalable knowledge?

More from Shivaramakrishnan Iyer
