Assuring Availability, Scalability, And Reliability Of IoT Applications

According to IDC research, 41.6 billion connected devices will be used worldwide by 2025. At a conceptual level, the Internet of Things (IoT) integrates such devices into a network of “things” – sensors, applications, databases, other networks, and technologies. Its primary purpose is connecting and exchanging data between devices and systems across the spectrum. Such solutions are used across multiple verticals, for example:

- Healthcare: Monitor vital signs such as glucose levels and heart rates, and alert caregivers when a reading breaches a safe threshold.

- Manufacturing: Collect data from equipment, analyze metrics, anticipate outcomes, find anomalies, and provide alerts to protect workers and machinery from failures and downtime.

- Energy: Monitor solar and wind energy generation and help detect any failure or abnormal decrease in energy efficiency.

For an IoT application, performance metrics such as accuracy and how quickly it responds to a high volume of data are critical success factors. Performance testing and engineering are therefore essential for identifying bottlenecks and ensuring that IoT applications function properly under all loads and network conditions. The performance of the UI layer under real-time network conditions must also be validated proactively to protect and enhance the end-user experience. If the performance of IoT applications is compromised, personnel safety may be jeopardized, especially in sectors such as healthcare and manufacturing.

IoT performance testing and engineering is complex and differs from traditional web and mobile application performance testing. This is due to the lack of standard protocols for IoT device communication; the diversity of IoT applications, devices, and platforms; and the large volume of fast-moving data that strains the infrastructure.

This blog will outline the approach for conducting IoT performance testing and engineering, the challenges involved, and how to overcome them.

How is Performance Testing and Engineering for IoT Applications different from Web Applications?

Multiple interconnected field devices of different models and hardware, including IoT sensors, connectivity modules, devices, networks, cloud-based applications, security systems, and data analytics systems, make up an IoT application ecosystem. On the other hand, web applications don’t have such complex ecosystems.

The table below lays out the differences across various parameters.

| Parameters | Web Application | IoT Application |
| --- | --- | --- |
| Communication Protocol | HTTP/HTTPS, FTP, SMTP, JDBC, etc. | MQTT, CoAP, AMQP, UDP, XMPP, HTTP, etc. |
| Types of Workloads Considered | Usually, the user-initiated workload is considered | Device-initiated & IoT platform-initiated workloads are considered |
| Simulation | End-user behavior simulation | Devices’ & sensors’ behavior simulation using MATLAB, IoTIFY, IBM Bluemix (now IBM Cloud), etc. |
| Performance Test Coverage | Application layer | Physical, network, transport & application layers |
| Big Data & BI | Not all web applications require performance analysis of big data processing & data analytics systems | Huge volumes of data from IoT devices are processed by big data, BI, and data analytics systems for intelligent decision-making, so the performance of these systems must be analyzed in all IoT applications |
| Requests/Responses | Users create the requests and receive the responses | IoT devices both create requests and receive responses, and receive requests and provide responses to them |

Table 1: Difference between Web Application and IoT Application Performance Testing and Engineering

Challenges in IoT Application Performance Testing and Engineering

Based on the differences mentioned above, the following are the challenges faced during performance testing and engineering for IoT applications:

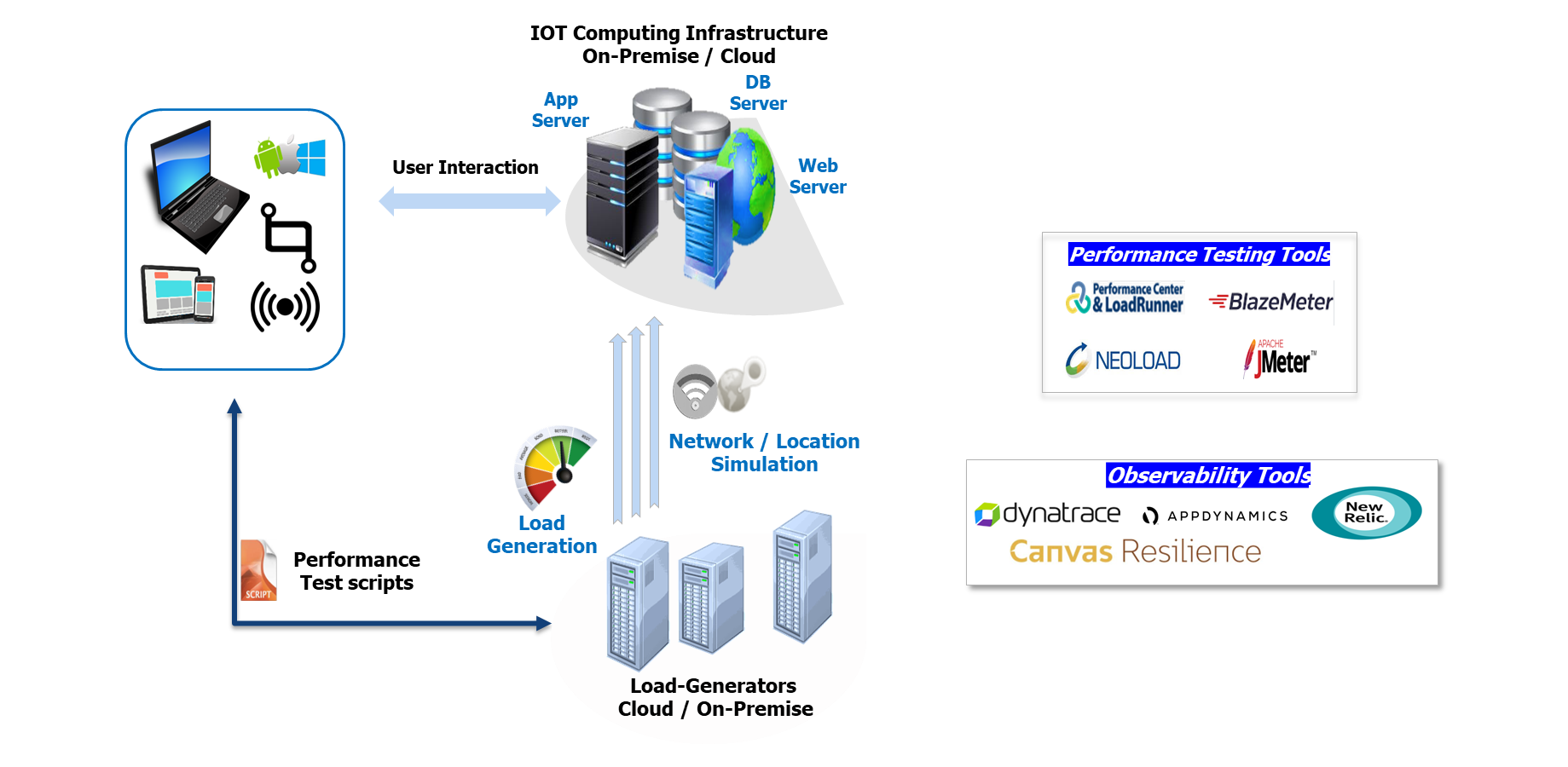

- A performance testing and engineering tool that supports IoT protocols is required. Not all tools support all the protocols; hence finalizing the right tool is necessary.

- IoT device usage patterns and continuous workload levels need to be replicated with the help of tools.

- There is a need for infrastructure to generate high-load tests to simulate load from millions of devices.

- Local processing power and other performance KPIs must be measured on the devices themselves, across diverse device types and models.

- Network emulation tools covering 3G, 4G, Bluetooth, Wi-Fi, ZigBee, LoRaWAN, etc. are required to perform realistic network simulation across devices.

- Sophisticated observability tools (Application Performance Management (APM) tools) are needed to analyze the application health and infrastructure health of various server components of IoT architecture.

- An effective test data management strategy is necessary for creating large synthetic data volumes.

- Investments are required for setting up an IoT test lab environment.
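As an illustration of the test data challenge above, synthetic telemetry can be produced programmatically rather than captured from real devices. The following is a minimal sketch only: the field names (`temperature_c`, `battery_pct`), the `device-000000` ID scheme, and the value distributions are assumptions made for this example, not part of any particular IoT platform.

```python
import json
import random
from datetime import datetime, timedelta, timezone


def generate_readings(device_count, readings_per_device, interval_s=60, seed=42):
    """Yield synthetic telemetry records for a fleet of simulated devices."""
    rng = random.Random(seed)  # a fixed seed keeps the test data reproducible
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)  # arbitrary start time
    for d in range(device_count):
        device_id = f"device-{d:06d}"  # hypothetical ID scheme
        for i in range(readings_per_device):
            yield {
                "device_id": device_id,
                "timestamp": (start + timedelta(seconds=i * interval_s)).isoformat(),
                # Assumed sensor model: readings scattered around 25 degrees C
                "temperature_c": round(rng.gauss(25.0, 2.0), 2),
                # Assumed battery model: slow linear drain, never below zero
                "battery_pct": max(0.0, round(100.0 - i * 0.1, 1)),
            }


if __name__ == "__main__":
    for record in generate_readings(device_count=2, readings_per_device=3):
        print(json.dumps(record))
```

Because the function is a generator, large data volumes can be streamed to a file or a load tool without holding everything in memory.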

Below are some ways in which we can overcome these challenges:

- Before selecting the ideal tool with the desired protocol, the architecture and communication mechanism should be carefully examined.

- The IoT application ecosystem is very broad and complicated, so not everything can be replicated in the test environment.

- IoT application performance testing and engineering demands tremendous load generation and workload simulation, which must be planned entirely in advance. A trade-off must be made between the various network and device types to be covered.

- Industry-standard observability tools can be purchased for end-to-end device monitoring. Observability tools should be linked with IoT performance testing and engineering.

- Network emulation is essential for complete IoT performance testing and engineering, and it needs to be planned early.

Approach for IoT Performance Testing and Engineering

The approach for conducting performance testing and engineering for IoT applications is based on their unique characteristics.

The IoT application ecosystem and the protocols used for communication should be thoroughly analyzed before identifying the right testing tool. Based on this analysis, a testing tool that supports the necessary protocols (Message Queuing Telemetry Transport (MQTT), Advanced Message Queuing Protocol (AMQP), Constrained Application Protocol (CoAP), HyperText Transfer Protocol (HTTP), etc.) should be selected.

Performance test metrics such as response time, network connectivity, packet loss, and message success and failure rates should be identified before testing. Tests should be designed to create a geographically distributed workload with varied network conditions.
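To make these metrics concrete, here is a small sketch of how raw test results might be summarized. The `MessageResult` record and the choice of a nearest-rank percentile are assumptions for illustration; real tools report these figures in their own formats.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MessageResult:
    # Round-trip latency in milliseconds; None means no acknowledgement arrived
    latency_ms: Optional[float]


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("percentile of an empty list is undefined")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(results: List[MessageResult]) -> dict:
    """Compute message success/failure rates and latency percentiles."""
    if not results:
        raise ValueError("no results to summarize")
    latencies = [r.latency_ms for r in results if r.latency_ms is not None]
    success_rate = len(latencies) / len(results)
    return {
        "sent": len(results),
        "success_rate": success_rate,
        "failure_rate": 1.0 - success_rate,
        "p50_ms": percentile(latencies, 50) if latencies else None,
        "p95_ms": percentile(latencies, 95) if latencies else None,
    }


if __name__ == "__main__":
    sample = [MessageResult(10.0), MessageResult(20.0), MessageResult(30.0),
              MessageResult(40.0), MessageResult(None)]
    print(summarize(sample))
```

Percentiles are used here rather than averages because a handful of slow devices can hide behind a healthy mean.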

Realistic workload conditions must be used to assess the IoT platform’s performance and scalability. APM and observability tools should be used to track and identify infrastructure problems and to improve IoT application performance so that it meets the specified Service Level Agreements (SLAs).

LTIMindtree’s in-house observability tool, Canvas Resilience, can monitor the applications. The tool combines chaos engineering, continuous observability, proactive resilience insights, and SRE dashboards to enable business resilience through hardening applications.

Fig. 1: IoT Application performance testing and engineering methodology

Performance testing and engineering methodology for MQTT Protocol testing using the JMeter tool

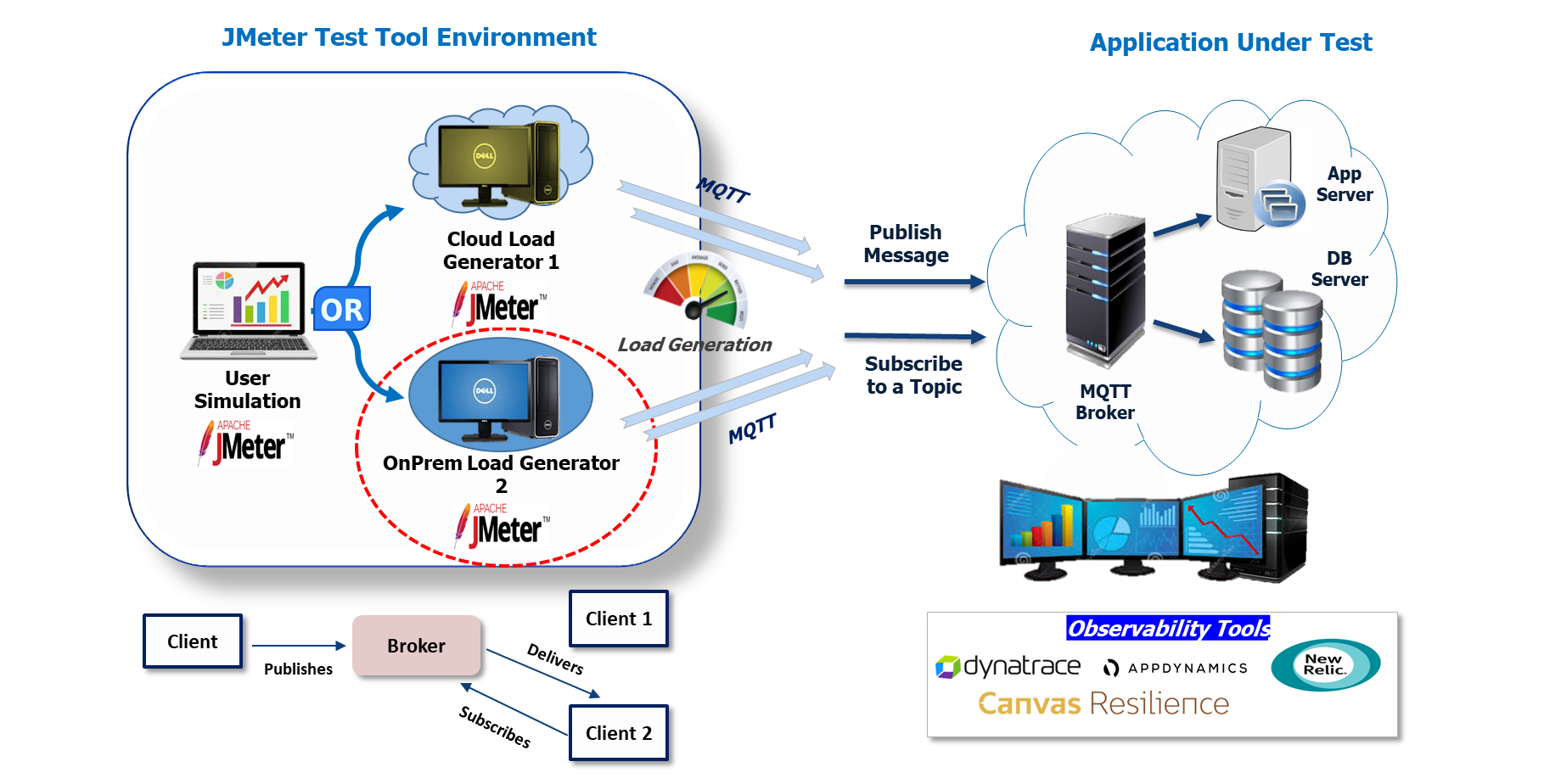

The methodology below uses MQTT support in Apache JMeter, an open-source tool (MQTT samplers are typically added through a plugin), to generate distributed load from on-premises and cloud-based load generators, in order to create a performance benchmark and identify the breakpoint of the MQTT broker.

As shown in the figure below, the application is tested through MQTT broker-based subscription. Load generation with JMeter can be done on-premises, using its master-slave (distributed) architecture, or through the cloud.

Fig. 2: Performance testing and engineering for MQTT protocol using JMeter
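One common way to locate the breakpoint mentioned above is a step-load test: the number of simulated clients is increased in fixed steps until the error rate crosses an agreed threshold. The sketch below shows only that control logic. `run_step` is a hypothetical callable that would trigger a real test run (for example, a JMeter execution) and return the observed error rate; `toy_broker` is a made-up stand-in with an assumed capacity of 50,000 connections so that the example is runnable.

```python
from typing import Callable, Optional


def find_breakpoint(
    run_step: Callable[[int], float],
    start: int,
    step: int,
    max_clients: int,
    max_error_rate: float = 0.01,
) -> Optional[int]:
    """Return the first load level whose error rate exceeds the threshold.

    Returns None if the system stays within the threshold up to max_clients.
    """
    clients = start
    while clients <= max_clients:
        error_rate = run_step(clients)
        if error_rate > max_error_rate:
            return clients
        clients += step
    return None


def toy_broker(clients: int) -> float:
    """Stand-in for a real test run (assumption, not a real broker model).

    Errors begin once the load passes an assumed capacity of 50,000 clients.
    """
    capacity = 50_000
    if clients <= capacity:
        return 0.0
    return min(1.0, (clients - capacity) / capacity)


if __name__ == "__main__":
    print(find_breakpoint(toy_broker, start=10_000, step=10_000, max_clients=100_000))
```

Smaller steps narrow down the breakpoint more precisely at the cost of more test runs.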

As a typical IoT application could have millions of devices, simulation of load on-premises would be very complex, time-consuming, and involve high costs. This would not be the case for traditional applications, where load only needs to be generated for a few thousand users. Hence for IoT applications, cloud-based load generation is recommended.
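A rough back-of-the-envelope calculation shows why the scale matters. The sketch below estimates message rate, payload throughput, and the number of load generators for a given fleet. All input figures, especially the number of simulated devices one generator can sustain, are assumptions that should be replaced with values measured for the chosen tool and hardware; protocol overhead is ignored.

```python
import math


def size_load_test(devices, msgs_per_device_per_min, payload_bytes, devices_per_generator):
    """Estimate aggregate load and the number of load generators needed."""
    msgs_per_sec = devices * msgs_per_device_per_min / 60
    # Payload only; protocol and TLS overhead are not included
    throughput_mbps = msgs_per_sec * payload_bytes * 8 / 1_000_000
    generators = math.ceil(devices / devices_per_generator)
    return {
        "msgs_per_sec": msgs_per_sec,
        "throughput_mbps": throughput_mbps,
        "generators": generators,
    }


if __name__ == "__main__":
    # Assumed scenario: one million devices, one 200-byte message per minute,
    # and 20,000 simulated devices per load generator.
    estimate = size_load_test(
        devices=1_000_000,
        msgs_per_device_per_min=1,
        payload_bytes=200,
        devices_per_generator=20_000,
    )
    print(estimate)
```

Under these assumed figures the test would need 50 load generators, which is usually easier to provision temporarily in the cloud than to maintain on-premises.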

Apart from JMeter, several widely used performance testing and engineering tools, such as Micro Focus LoadRunner and Tricentis NeoLoad, also support the MQTT, HTTP, AMQP, and CoAP protocols. There are also specialized IoT performance testing and engineering tools, such as MachNation Tempest, which support MQTT, WebSocket, Modbus, LoRaWAN, and custom protocols.

Most commercial performance testing and engineering tools also include network simulation capabilities. Network simulation is crucial during performance testing and engineering of IoT applications. Simulating packet loss and imposing network latency and bandwidth limits is vital, since users and devices in the application ecosystem are connected over constrained network connections.
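The effect of these impairments can be illustrated with a simple model. The sketch below is not a network emulator (real tests apply impairments at the network level, with the tool's built-in features or utilities such as Linux `tc netem`); it only shows how loss, latency, jitter, and a bandwidth cap combine for each message. The parameter values in the usage example are assumptions, not measurements of any real network.

```python
import random


def simulate_link(message_count, loss_rate, base_latency_ms, jitter_ms,
                  payload_bytes=200, bandwidth_kbps=1000, seed=7):
    """Return per-message delivery times in ms; None marks a dropped message."""
    rng = random.Random(seed)  # fixed seed for repeatable runs
    # Time to push the payload through the bandwidth cap (1 kbps = 1 bit/ms)
    transmit_ms = payload_bytes * 8 / bandwidth_kbps
    outcomes = []
    for _ in range(message_count):
        if rng.random() < loss_rate:
            outcomes.append(None)  # packet lost
        else:
            outcomes.append(base_latency_ms + rng.uniform(0, jitter_ms) + transmit_ms)
    return outcomes


if __name__ == "__main__":
    # Assumed "constrained link" profile: 2% loss, 150 ms latency, 100 kbps
    results = simulate_link(1000, loss_rate=0.02, base_latency_ms=150.0,
                            jitter_ms=50.0, bandwidth_kbps=100)
    delivered = [r for r in results if r is not None]
    print(f"delivered {len(delivered)} of {len(results)} messages")
    print(f"worst delivery time: {max(delivered):.1f} ms")
```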

Monitoring IoT applications using APM tools like AppDynamics, Dynatrace, New Relic, Canvas Resilience, etc., is essential to analyze the performance impact of various components. If the application is hosted on the cloud, cloud-native performance monitoring using AWS CloudWatch or Azure Monitor will also help monitor, diagnose, and troubleshoot performance issues.

Conclusion

IoT applications need to be evaluated for their performance and scalability characteristics as they see a surge in use across industries. Performance testing and engineering for IoT applications is complicated and considerably different from that for regular web applications. Although there are many challenges, we can overcome them with the right strategy and approach.
