Stockholm’s Spotlight on AI: Compounding and Agentic AI Transforming Business

Recently, I had the opportunity to attend a Databricks and Snowflake event in Stockholm. It was an eye-opening experience where customers shared their journeys with artificial intelligence, particularly around the concepts of Compounding AI and Agentic AI. Both concepts are widely debated in public discourse and represent a step forward for AI. In this blog, I’ll delve into the essence of Agentic AI, examining its key features, its potential, and how it enables machines to operate autonomously and make well-informed decisions without constant human oversight.

Acknowledging AI’s limitations

As we explore AI’s capabilities, it is equally important to acknowledge its limitations, particularly when it comes to ChatGPT. It has the remarkable ability to analyze vast amounts of public information and generate new content based on existing knowledge. This feature undoubtedly streamlines numerous tasks and enhances productivity across various sectors. However, two critical challenges were highlighted. First, the issue of correctness or accuracy remains a significant concern. How often can we trust the information AI generates? While the content might appear coherent, errors or misinformation can easily slip through. This raises a pressing question: can we rely on AI for critical applications without robust checks in place?

The second challenge lies in research and development (R&D). At its core, R&D is about breaking new ground and discovering innovations that have yet to be conceived. But when an AI model merely regurgitates existing knowledge, does it truly contribute to innovation? To unlock AI’s transformative potential, we must develop methods that enable it to aid the creative and exploratory aspects of R&D. Only then can we fully harness AI to drive meaningful advancements in our fields.

How AI works today: Non-Agentic workflow

In today’s landscape, AI applications predominantly rely on large language models (LLMs). When users input a prompt, they expect a response generated by the model. The whole task is handled in a single pass, with the LLM producing a comprehensive and coherent output based solely on the initial input. This is an efficient process that delivers good results at high speed, much like ChatGPT, where the entire operation runs seamlessly from start to finish.
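The following minimal sketch of a single-pass workflow makes the contrast with the agentic approach concrete. `toy_llm` is a hypothetical stand-in for a real model API, used so that the example runs offline. What matters is the shape of the process: one prompt goes in and one unreviewed response comes out.

```python
from typing import Callable


# Hypothetical stand-in for a hosted LLM; a real application would call a model API here.
def toy_llm(prompt: str) -> str:
    return f"Answer to: {prompt}"


def single_pass(prompt: str, llm: Callable[[str], str]) -> str:
    """Non-agentic workflow: exactly one model call, and its output is returned as-is.
    There is no planning step, no critique, and no second attempt."""
    return llm(prompt)


print(single_pass("Summarize Q3 sales in two sentences", toy_llm))
```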

Agentic AI workflow with an iterative function

In contrast, Agentic AI offers a more dynamic approach. Instead of a single pass, it employs multiple AI agents built on large language models (LLMs). Each agent has a different role, background, persona, and set of tools, and the agents work alongside one another, iterating to complete a task. For example, one LLM handles the initial thinking, a second acts as a writer, and a third and fourth serve as spell checker and grammar checker. A fifth LLM identifies areas that need revision or further research. Meanwhile, an orchestrator oversees the iteration process and ensures that the output is refined through several rounds before it is finalized.
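The collaboration described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not how any particular framework implements it. Each agent (`planner`, `writer`, `spell_checker`, `grammar_checker`, `reviewer`) is a hypothetical deterministic function standing in for an LLM with its own role prompt. `orchestrate` plays the orchestrator, repeating the check, review, and revise cycle until the reviewer has no further requests.

```python
# Hypothetical toy agents: each stands in for an LLM with its own role prompt.
# They are deterministic so the loop can run offline.


def planner(task: str) -> str:
    """Initial thinking: turn the task into an outline."""
    return f"Outline for '{task}': intro, key points, conclusion"


def writer(outline: str) -> str:
    """Writer: produce a first draft (with a deliberate typo and no final period)."""
    return f"Draft based on [{outline}]. teh results are promising"


def spell_checker(text: str) -> str:
    return text.replace("teh", "the")


def grammar_checker(text: str) -> str:
    """Toy rule: capitalize the first letter of each sentence."""
    sentences = text.split(". ")
    return ". ".join(s[:1].upper() + s[1:] for s in sentences)


def reviewer(text: str) -> list[str]:
    """Fifth agent: list needed revisions; an empty list means the draft is accepted."""
    issues = []
    if not text.endswith("."):
        issues.append("add closing punctuation")
    return issues


def revise(text: str, issues: list[str]) -> str:
    if "add closing punctuation" in issues:
        text += "."
    return text


def orchestrate(task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Orchestrator: draft once, then loop check -> review -> revise until accepted.
    Returns the draft and the round in which it was accepted (best effort after max_rounds)."""
    draft = writer(planner(task))
    for round_no in range(1, max_rounds + 1):
        draft = grammar_checker(spell_checker(draft))
        issues = reviewer(draft)
        if not issues:
            return draft, round_no
        draft = revise(draft, issues)
    return draft, max_rounds


final, rounds = orchestrate("AI report")
print(rounds, final)
```

The first draft fails review because it lacks closing punctuation, so the orchestrator runs a second round before accepting it. In a real system each function would wrap a model call, and the reviewer's feedback would be free text rather than a fixed rule.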

This iterative approach mirrors how humans operate. We don’t accomplish everything in a single attempt without thoughtful consideration and planning. Instead, we plan, iterate, and ultimately discover the best solution.

Why Agentic AI delivers better results

The effectiveness of Agentic AI becomes clear when we look at the numbers. For instance, on the HumanEval coding benchmark, GPT-3.5 given a single, direct prompt (zero-shot) delivers correct results only 48% of the time. In other words, when the model must answer in one attempt, it gets the task right less than half the time. GPT-4 fares better under the same conditions, with a 67% accuracy rate.

However, when GPT-3.5 is wrapped in an agentic workflow, its accuracy rises to as much as 95.1%. This leap demonstrates the power of collaborative workflows and iterative refinement, even with a model considered less advanced. It’s a reminder that the right process can matter as much as, or more than, the choice of model.
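Comparing error rates (1 minus accuracy) instead of accuracy shows more clearly why these numbers matter. Take the 48%, 67%, and 95.1% figures quoted above. Upgrading the model cuts the error rate from 52% to 33%, while the agentic workflow cuts it to 4.9%. The small helper below, written only for this comparison, computes the ratio:

```python
def error_reduction(baseline_acc: float, improved_acc: float) -> float:
    """Factor by which the error rate (1 - accuracy) shrinks versus the baseline."""
    return (1 - baseline_acc) / (1 - improved_acc)


# Accuracy figures quoted in the text, as fractions of tasks solved correctly.
gpt35_single = 0.48
gpt4_single = 0.67
gpt35_agentic = 0.951

print(f"Upgrade GPT-3.5 -> GPT-4:     {error_reduction(gpt35_single, gpt4_single):.1f}x fewer errors")
print(f"Wrap GPT-3.5 in agentic loop: {error_reduction(gpt35_single, gpt35_agentic):.1f}x fewer errors")
```

The model upgrade gives roughly 1.6x fewer errors, while the agentic workflow gives roughly 10.6x fewer.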

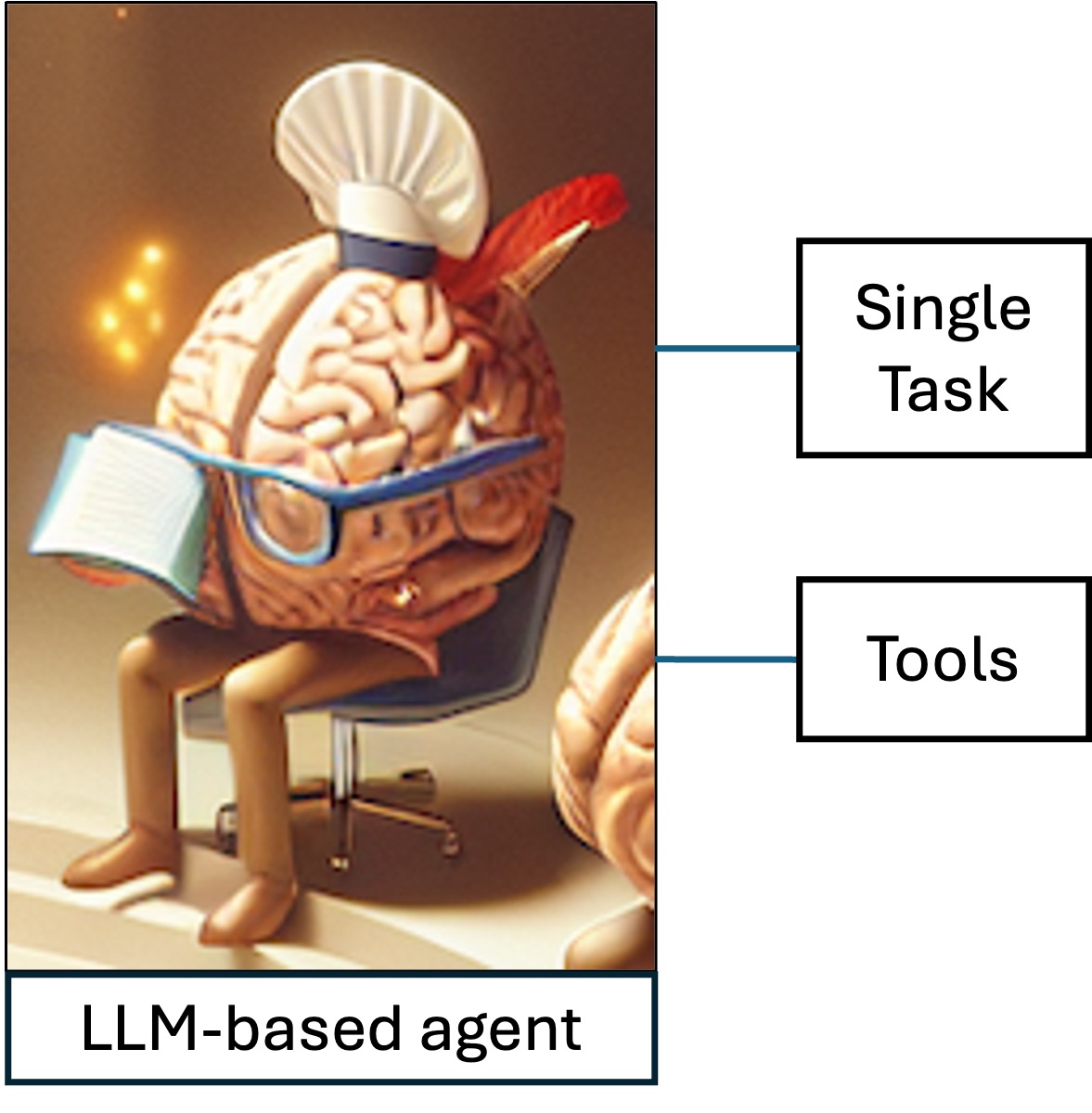

Specialization in AI: Controlling LLM functionality

Each LLM-based agent within the workgroup comes equipped with advanced capabilities designed to enhance productivity and effectiveness. However, it operates with a single, focused purpose that shapes its functionality.

The primary goal is to narrow the function of each agent so that it excels at one specific task, which in turn improves the efficiency and reliability of the group’s overall performance. This approach makes the most of each agent’s capabilities and also reduces the potential for errors, because each agent concentrates exclusively on its designated function.

In essence, the aim is to refine individual AI agents so that each one becomes exceptionally skilled at a single task. The team can then leverage the strengths of each agent, creating a harmonious work environment where specific tasks are handled with precision and expertise. This specialization contributes to the overall success of the workgroup, as each agent plays a critical role in achieving collective goals.
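Here is a minimal sketch of this specialization, assuming a workgroup that routes each task type to exactly one agent. `SpecialistAgent`, `dispatch`, and the two toy skills are hypothetical names for illustration. In practice each `handle` would be an LLM call with a narrow, single-purpose prompt. An agent asked to work outside its specialty refuses rather than guessing.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SpecialistAgent:
    """Hypothetical single-purpose agent: it accepts exactly one kind of task."""

    name: str
    skill: str                    # the only task type this agent handles
    handle: Callable[[str], str]  # in practice, an LLM call with a narrow prompt

    def run(self, task_type: str, payload: str) -> str:
        if task_type != self.skill:
            raise ValueError(f"{self.name} only handles '{self.skill}' tasks")
        return self.handle(payload)


def summarize(text: str) -> str:
    """Toy summarizer: keep the first sentence."""
    return text.split(".")[0] + "."


def count_words(text: str) -> str:
    return str(len(text.split()))


# The workgroup maps each task type to exactly one specialist.
workgroup = {
    agent.skill: agent
    for agent in [
        SpecialistAgent("Summarizer", "summarize", summarize),
        SpecialistAgent("Counter", "count_words", count_words),
    ]
}


def dispatch(task_type: str, payload: str) -> str:
    agent = workgroup.get(task_type)
    if agent is None:
        raise KeyError(f"No specialist for '{task_type}'")
    return agent.run(task_type, payload)


text = "Agents specialize. Each one does a single job."
print(dispatch("summarize", text))
print(dispatch("count_words", text))
```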

The role of tools

Another key aspect of Agentic AI is the use of pre-coded tools. These tools give LLMs access to predefined logic, ensuring consistent results every time, unlike free-form LLM output, which can vary from run to run. Many of these tools already exist because programmers have often built them for their own code, so there is no need to create them from scratch. Whether through external libraries or API calls, all these resources can now be leveraged by large language models.
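The sketch below shows the idea in its simplest form. It assumes that the LLM requests a tool by emitting a small JSON object. The JSON shape, the `TOOLS` registry, and both tool functions are hypothetical, and real function-calling APIs differ in their details. The key property is that ordinary, pre-coded Python does the arithmetic, so the same call always returns the same answer.

```python
import json
from typing import Any, Callable


# Pre-coded, deterministic tools. In a real system these might wrap existing
# library functions or API clients; these two are illustrative.
def compound_interest(principal: float, rate: float, years: int) -> float:
    return round(principal * (1 + rate) ** years, 2)


def convert_currency(amount: float, rate: float) -> float:
    return round(amount * rate, 2)


TOOLS: dict[str, Callable[..., Any]] = {
    "compound_interest": compound_interest,
    "convert_currency": convert_currency,
}


def execute_tool_call(tool_call_json: str) -> Any:
    """Run a tool call emitted by the LLM in a hypothetical JSON format:
    {"name": <tool name>, "arguments": {<keyword arguments>}}."""
    call = json.loads(tool_call_json)
    if call["name"] not in TOOLS:
        raise KeyError(f"Unknown tool: {call['name']}")
    return TOOLS[call["name"]](**call["arguments"])


# Pretend the LLM chose to call a tool instead of doing the arithmetic itself.
llm_output = '{"name": "compound_interest", "arguments": {"principal": 1000, "rate": 0.05, "years": 2}}'
print(execute_tool_call(llm_output))
```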

The power of reflection

One often overlooked but transformative technique in Agentic AI is reflection. By prompting the large language model to evaluate its previous outputs, or by asking it to identify areas for improvement and provide an enhanced response, we can significantly boost its performance. Though this process may seem simple and obvious, it can lead to much better results from large language models. Isn’t this similar to how we, as humans, learn and grow?
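Reflection can be sketched as a generate, critique, and revise loop in which the same model evaluates its own output. `toy_model` is a hypothetical stand-in that reacts to three prompt prefixes (`TASK:`, `CRITIQUE:`, `REVISE:`). With a real LLM, these would be three differently worded prompts sent to one model.

```python
from typing import Callable

Model = Callable[[str], str]


def toy_model(prompt: str) -> str:
    """Hypothetical model that can answer, critique its own answer, and revise it."""
    if prompt.startswith("TASK: "):
        return "Paris is the capital of France"
    if prompt.startswith("CRITIQUE: "):
        answer = prompt[len("CRITIQUE: "):]
        return "OK" if answer.endswith(".") else "End the sentence with a period."
    if prompt.startswith("REVISE: "):
        answer, _feedback = prompt[len("REVISE: "):].split(" | FEEDBACK: ")
        return answer + "."
    raise ValueError("unknown prompt")


def reflect_and_improve(task: str, model: Model, max_rounds: int = 3) -> str:
    """Generate an answer, then let the model critique and revise it until it says OK."""
    answer = model(f"TASK: {task}")
    for _ in range(max_rounds):
        critique = model(f"CRITIQUE: {answer}")
        if critique == "OK":
            break
        answer = model(f"REVISE: {answer} | FEEDBACK: {critique}")
    return answer


print(reflect_and_improve("What is the capital of France?", toy_model))
```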

The orchestrator: A central figure

A crucial part of the workgroup is the orchestrator (leader agent), whose primary task is to guide the AI agents so that they function reliably as a cohesive team. This requires the agents to actively communicate and engage with one another. The flow of communication therefore has to be managed, which calls for an orchestrator that is aware of every AI agent in the group. The orchestrator coordinates tasks: if one agent fails, the orchestrator reallocates its responsibilities so that another agent can step in and complete the analysis the first agent could not.
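Here is a minimal sketch of the failover behavior described above. It assumes the orchestrator keeps a list of every agent and the skills each one offers. `Agent`, `Orchestrator`, and the two analyst functions are hypothetical. `flaky_analyst` simulates a model timeout so that the reallocation path actually runs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    skills: set[str]
    work: Callable[[str], str]


class Orchestrator:
    """Hypothetical leader agent: knows every agent and reassigns work on failure."""

    def __init__(self, agents: list[Agent]) -> None:
        self.agents = agents
        self.log: list[str] = []

    def run(self, skill: str, payload: str) -> str:
        candidates = [a for a in self.agents if skill in a.skills]
        for agent in candidates:
            try:
                result = agent.work(payload)
                self.log.append(f"{agent.name}: ok")
                return result
            except Exception as exc:  # any failure triggers reallocation to the next agent
                self.log.append(f"{agent.name}: failed ({exc})")
        raise RuntimeError(f"No agent could complete '{skill}'")


def flaky_analyst(payload: str) -> str:
    raise TimeoutError("model timed out")


def backup_analyst(payload: str) -> str:
    return f"analysis of {payload}"


orch = Orchestrator([
    Agent("analyst-1", {"analyze"}, flaky_analyst),
    Agent("analyst-2", {"analyze"}, backup_analyst),
])
print(orch.run("analyze", "sales.csv"))
print(orch.log)
```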

Framework for collaboration

Today, several advanced frameworks exist for managing AI agents, particularly for content generation and collaborative tasks. Two examples are AutoGen and CrewAI, which serve distinct purposes and cater to different user needs: CrewAI focuses on ease of use and collaboration, while AutoGen offers deeper integration capabilities for enterprise applications.

These frameworks simplify the orchestration of complex workflows, help improve LLM performance, and make it easier to build advanced applications driven by multi-agent interactions. The result? Sophisticated solutions with far less custom effort.

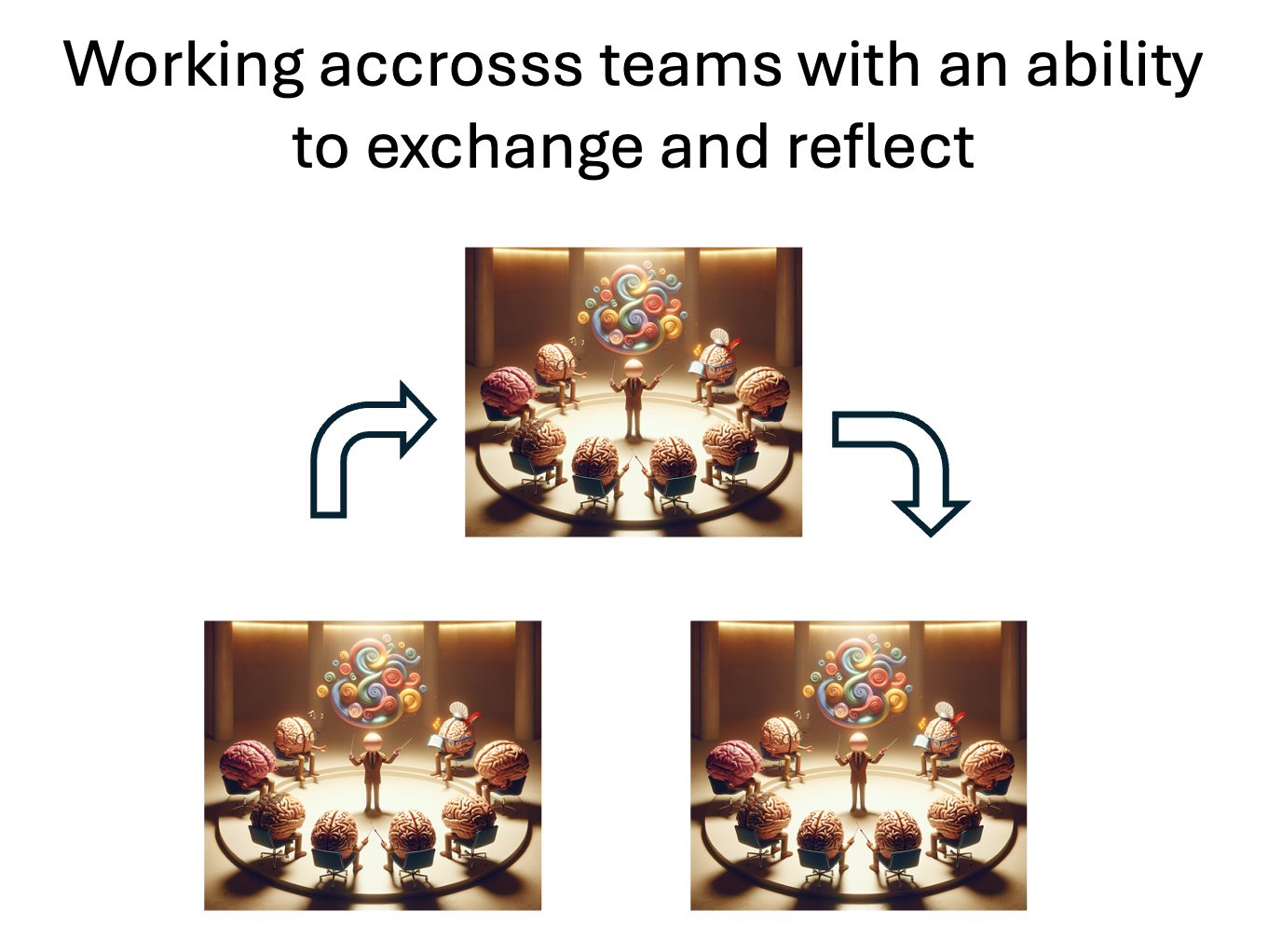

The future of AI: Task-specific workgroups

To add a bit of complexity, it is important to recognize that these workgroups can be task-specific. For instance, one workgroup might focus on data analysis, data collection, coding, transforming data, and joining tables before serving the results to a final table.

Once other groups can treat this workgroup as a single agent, there is less need to understand its inner workings; you simply care about the results. This allows for multiple workgroups: one dedicated to data processing and another focused on data visualization and presentation for end users.
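When a whole workgroup appears to others as a single agent, that is essentially composition. In this sketch, all names and data are hypothetical. A `Workgroup` hides its internal pipeline of agents behind one `run()` method, so the top-level system can chain a data-processing group and a visualization group without knowing what happens inside either one.

```python
from collections import defaultdict
from typing import Any, Callable


class Workgroup:
    """Hypothetical workgroup: an internal pipeline of agents behind a single run() method."""

    def __init__(self, name: str, steps: list[Callable[[Any], Any]]) -> None:
        self.name = name
        self._steps = steps  # internal agents; callers never need to see these

    def run(self, data: Any = None) -> Any:
        for step in self._steps:
            data = step(data)
        return data


def collect(_: Any) -> list[dict]:
    # Stand-in for a data-collection agent; the rows are made up for illustration.
    return [
        {"region": "north", "sales": "120"},
        {"region": "south", "sales": "80"},
        {"region": "north", "sales": "30"},
    ]


def transform(rows: list[dict]) -> list[dict]:
    return [{**row, "sales": int(row["sales"])} for row in rows]


def aggregate(rows: list[dict]) -> dict[str, int]:
    totals = defaultdict(int)
    for row in rows:
        totals[row["region"]] += row["sales"]
    return dict(totals)


def render(totals: dict[str, int]) -> str:
    # Text "chart": one '#' per 10 units of sales.
    return "\n".join(
        f"{region:<6}{'#' * (value // 10)} {value}" for region, value in sorted(totals.items())
    )


data_group = Workgroup("data-processing", [collect, transform, aggregate])
viz_group = Workgroup("visualization", [render])

# The top-level system treats each workgroup as a single agent via its run() method.
system = Workgroup("system", [data_group.run, viz_group.run])
print(system.run())
```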

Communication between these groups is crucial. Viewed from a macro perspective, the entire operation becomes a single system in which multiple AI agents and workgroups interact seamlessly.

Envisioning the future

How can we harness AI’s potential to drive meaningful impact? Its applications extend beyond content creation and coding, and approaches like Agentic AI and Compounding AI present an opportunity to unlock deeper insights and deliver superior outcomes.

A key focus lies in information retrieval: enhancing the ability to navigate and use vast amounts of data effectively. Adopting approaches such as Agentic AI and Compounding AI plays a pivotal role in this evolution, enabling organizations to extract critical insights and achieve greater results.

For many organizations, transitioning from awareness to the adoption of Agentic AI can feel overwhelming. This journey impacts people, processes, and technology, often requiring the support of an ecosystem or trusted partners to navigate the change successfully.

More from Tom Christensen
